This guide is based on my talk at the Civo Community meetup on 29 July 2020.

Monitoring is crucial for managing any system. Prometheus, the de facto standard for cloud native monitoring, can be complicated to get started with, which is why many people pick hosted monitoring solutions like Datadog. However, it doesn't have to be complicated, and if you're monitoring Kubernetes, Prometheus is in my opinion the best option.

The great people over at CoreOS developed the Prometheus Operator for Kubernetes, which lets you define your Prometheus configuration in YAML and deploy it alongside your application manifests. This makes a lot of sense if you're deploying many applications, perhaps across many teams, because each team can define its own monitoring alerts.

We're going to build a customized Prometheus monitoring setup that includes only the parts and alerts we want to use, rather than the full-fat Prometheus setup, which may be overkill for a k3s cluster. We're also going to set up a Watchdog alert to an external monitor to notify us if the cluster itself is experiencing issues.

You will need:

- A k3s cluster running on Civo. You can sign up to Civo here.

- kubectl installed and configured to point to your cluster.

- Helm 3 installed on your machine.

- A copy of my demo repository to work from, git cloned to your machine.

For this guide, I'm using MailHog to receive alerts because it's simple. However, you might choose to hook this monitoring into your mail provider to send emails (for Gmail, see the commented-out lines here) or to send Slack messages (see the Prometheus documentation).

To install MailHog:

```shell
helm repo add codecentric https://codecentric.github.io/helm-charts
helm upgrade --install mailhog codecentric/mailhog
```

Take a look here to see how you can view the alerts sent to MailHog.

Installing Prometheus Operator

To customize our Prometheus setup, we'll install it into our cluster using Helm and the values file from the demo repository:

```shell
helm repo add stable https://charts.helm.sh/stable
helm upgrade --install prometheus stable/prometheus-operator --values prometheus-operator-values.yaml
```

The above command deploys Prometheus, Alert Manager and Grafana, with a few options that don't work on k3s disabled. You'll also get a set of default Prometheus Rules (alerts) configured, which will alert you about most of the things you need to worry about when running a Kubernetes cluster.

The values file also contains a few commented-out sections, such as CPU and memory resource requests and limits, which you should definitely set once you know the resources each service needs in your specific environment.

I also recommend setting up some Pod Priority Classes in your cluster and giving the core parts of the system a high priority, so that if the cluster runs low on resources, Prometheus will still run and alert you.

Under routes in the values file, you will see that I've sent a few of the default Prometheus Rules to the null receiver, which effectively mutes them. You might choose to remove some of these or add other alerts to the list:

```yaml
routes:
- match:
    alertname: Watchdog
  receiver: 'null'
- match:
    alertname: CPUThrottlingHigh
  receiver: 'null'
- match:
    alertname: KubeMemoryOvercommit
  receiver: 'null'
- match:
    alertname: KubeCPUOvercommit
  receiver: 'null'
- match:
    alertname: KubeletTooManyPods
  receiver: 'null'
```
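To see why these routes mute the alerts, here is a minimal sketch of how Alertmanager walks child routes: they are checked in order, the first route whose match labels all equal the alert's labels wins (when continue is not set), and anything unmatched falls through to the parent route's receiver. The ROUTES list is an illustrative copy of the YAML above, and "email" is a hypothetical name for the top-level receiver; this is not Alertmanager's actual code.

```python
from typing import Dict, List

# Illustrative copy of the muted routes above (not parsed from the values file).
MUTED = ["Watchdog", "CPUThrottlingHigh", "KubeMemoryOvercommit",
         "KubeCPUOvercommit", "KubeletTooManyPods"]
ROUTES: List[Dict] = [{"match": {"alertname": name}, "receiver": "null"} for name in MUTED]


def route_alert(labels: Dict[str, str], routes: List[Dict], default_receiver: str) -> str:
    """Pick a receiver the way Alertmanager's child routes do (no `continue: true`).

    The first route whose `match` labels all equal the alert's labels wins;
    if none match, the parent route's receiver handles the alert.
    """
    for route in routes:
        if all(labels.get(key) == value for key, value in route["match"].items()):
            return route["receiver"]
    return default_receiver


if __name__ == "__main__":
    # "email" is a hypothetical top-level receiver name.
    for name in ("Watchdog", "KubePodCrashLooping"):
        print(name, "->", route_alert({"alertname": name, "severity": "warning"}, ROUTES, "email"))
```

Extra labels on the alert (such as severity) don't prevent a match, which is why a single alertname entry is enough to mute an alert.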

If you make changes to the file after you have deployed it, just re-run helm upgrade --install prometheus stable/prometheus-operator --values prometheus-operator-values.yaml from above to apply your new changes.

Accessing Prometheus, Alert Manager and Grafana

You may have noticed that the values file we have been working with doesn't configure any Ingress or LoadBalancer to access these services. This is because Prometheus and Alert Manager don't support any authentication out of the box, and Grafana is spun up with default credentials (username: admin, password: prom-operator). If you were running this in production, you could set up basic authentication using Traefik, or fuller authentication through something like oauth2-proxy.
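If you go the Traefik basic-auth route, Traefik expects credentials in htpasswd format, and it accepts MD5, SHA1 or bcrypt hashes. Below is a minimal sketch that builds an htpasswd line with the {SHA} scheme (base64 of the SHA-1 digest) using only the standard library. The function name and credentials are hypothetical, and {SHA} is unsalted and weak, so treat this as illustrative and prefer bcrypt (for example htpasswd -B) for anything exposed to the Internet.

```python
import base64
import hashlib


def htpasswd_sha1(user: str, password: str) -> str:
    """Build an htpasswd entry using the {SHA} scheme: base64(SHA-1(password))."""
    digest = hashlib.sha1(password.encode("utf-8")).digest()
    # The doubled braces produce a literal "{SHA}" prefix in the f-string.
    return f"{user}:{{SHA}}{base64.b64encode(digest).decode('ascii')}"


if __name__ == "__main__":
    # Hypothetical credentials; the resulting line goes into the Secret Traefik reads.
    print(htpasswd_sha1("admin", "change-me"))
```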

Without an Ingress, you need to use kubectl port-forward to access the services for now. In separate terminal windows, run the following commands:

```shell
kubectl port-forward svc/prometheus-grafana 8080:80
kubectl port-forward svc/prometheus-prometheus-oper-prometheus 9090
kubectl port-forward svc/prometheus-prometheus-oper-alertmanager 9093
```

This will make Grafana accessible on http://localhost:8080, Prometheus on http://localhost:9090 and Alert Manager on http://localhost:9093.
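With the Prometheus port-forward running, you can also query Prometheus directly through its HTTP API. The sketch below, which assumes the localhost:9090 forward from above, runs the instant query up against /api/v1/query and lists the (job, instance) pairs whose up value is 0, meaning the last scrape failed. The helper names are hypothetical; the response shape (status, data.result, and value as [timestamp, "string value"]) follows the Prometheus HTTP API.

```python
import json
import urllib.parse
import urllib.request
from typing import List, Tuple


def query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for Prometheus' /api/v1/query endpoint."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def down_targets(response: dict) -> List[Tuple[str, str]]:
    """Return sorted (job, instance) pairs whose `up` sample is 0."""
    if response.get("status") != "success":
        raise ValueError(f"query failed: {response.get('error')}")
    down = []
    for series in response["data"]["result"]:
        _timestamp, value = series["value"]  # instant vector sample: [unix_time, "value"]
        if float(value) == 0:
            labels = series["metric"]
            down.append((labels.get("job", ""), labels.get("instance", "")))
    return sorted(down)


def fetch(base_url: str, promql: str) -> dict:
    """Run the query against a live Prometheus and decode the JSON body."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Assumes `kubectl port-forward ... 9090` is running in another terminal.
    print(down_targets(fetch("http://localhost:9090", "up")))
```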

You'll see that Grafana is already configured with lots of useful dashboards and Prometheus is configured with Rules to send alerts for pretty much everything you need to monitor in a production cluster.

The power of Prometheus Operator

Because k3s uses Traefik as its ingress controller out of the box, we want to add monitoring for it. Prometheus "scrapes" (pulls) metrics from services rather than having metrics pushed to it, as many other systems do. Many "cloud native" applications expose a port for Prometheus metrics by default, and Traefik is no exception.

If you are developing your own apps with this mindset, anything you build will need a metrics endpoint and a Kubernetes Service that exposes that port. Once you have done that, Prometheus will be able to scrape those metrics, just like in our Traefik example below.
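For your own apps, a metrics endpoint is just an HTTP path that returns plain text in Prometheus' exposition format. Here is a minimal, standard-library-only sketch that counts requests and serves them on /metrics. The metric name myapp_requests_total and port 8000 are hypothetical; in a real app you would more likely use the official prometheus_client library rather than rendering the text by hand.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical app metric. HTTPServer handles one request at a time, so no lock is needed.
_requests_total = 0


def render_metrics(requests_total: int) -> str:
    """Render a single counter in Prometheus' text exposition format."""
    return (
        "# HELP myapp_requests_total Total requests handled by the app.\n"
        "# TYPE myapp_requests_total counter\n"
        f"myapp_requests_total {requests_total}\n"
    )


class Handler(BaseHTTPRequestHandler):
    def _reply(self, body: str, content_type: str) -> None:
        data = body.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self) -> None:
        global _requests_total
        if self.path == "/metrics":
            # What Prometheus fetches on every scrape.
            self._reply(render_metrics(_requests_total), "text/plain; version=0.0.4")
        else:
            # Any other path is "real" app traffic that we count.
            _requests_total += 1
            self._reply("hello\n", "text/plain")


if __name__ == "__main__":
    # The Kubernetes Service would expose this port, e.g. under the name "metrics".
    HTTPServer(("", 8000), Handler).serve_forever()
```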

All we need to do to get Prometheus scraping Traefik is add a Prometheus Operator ServiceMonitor resource, which tells Prometheus which existing Service to scrape. I have defined one in the repository in traefik-servicemonitor.yaml. It is a short file that tells Prometheus that Traefik exposes metrics on a port named metrics in the kube-system namespace:

```yaml
# traefik-servicemonitor.yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app: traefik
    release: prometheus
  name: traefik
spec:
  endpoints:
  - port: metrics
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      app: traefik
```

We can get it up and running by applying it to our cluster:

```shell
kubectl apply -f traefik-servicemonitor.yaml
```
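The selection happens in two stages, which the sketch below models with plain dicts. First, the Prometheus resource only picks up ServiceMonitors whose labels match its own selector; assuming the chart's default, that selector is the Helm release label, which is why the file carries release: prometheus. Second, each selected ServiceMonitor matches Services in the listed namespaces by matchLabels and scrapes the named port. The data structures and the Service name traefik-prometheus are hypothetical stand-ins, not the operator's real objects, and the real operator resolves targets through Endpoints.

```python
from typing import Dict, List, Tuple


def labels_match(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """matchLabels semantics: every selector key must be present with an equal value."""
    return all(labels.get(key) == value for key, value in selector.items())


def scrape_targets(prometheus_selector: Dict[str, str],
                   service_monitors: List[dict],
                   services: List[dict]) -> List[Tuple[str, str, str]]:
    """Return (namespace, service, port name) triples that would be scraped."""
    targets = []
    for sm in service_monitors:
        if not labels_match(prometheus_selector, sm["labels"]):
            continue  # Prometheus ignores this ServiceMonitor entirely
        for svc in services:
            if svc["namespace"] not in sm["namespaces"]:
                continue  # namespaceSelector.matchNames
            if not labels_match(sm["match_labels"], svc["labels"]):
                continue  # selector.matchLabels
            for port in sm["ports"]:
                if port in svc["port_names"]:
                    targets.append((svc["namespace"], svc["name"], port))
    return targets


# traefik-servicemonitor.yaml flattened into a plain dict.
TRAEFIK_SM = {"labels": {"app": "traefik", "release": "prometheus"},
              "namespaces": ["kube-system"], "match_labels": {"app": "traefik"},
              "ports": ["metrics"]}
# Hypothetical shape of the Traefik metrics Service in k3s (name assumed).
TRAEFIK_SVC = {"name": "traefik-prometheus", "namespace": "kube-system",
               "labels": {"app": "traefik"}, "port_names": ["metrics"]}

if __name__ == "__main__":
    print(scrape_targets({"release": "prometheus"}, [TRAEFIK_SM], [TRAEFIK_SVC]))
```

If a ServiceMonitor you add never shows up under Status > Targets in Prometheus, a missing release label is a likely first thing to check.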

You can also do something similar with Grafana dashboards. Just deploy them in a ConfigMap like this:

```shell
kubectl apply -f traefik-dashboard.yaml
```

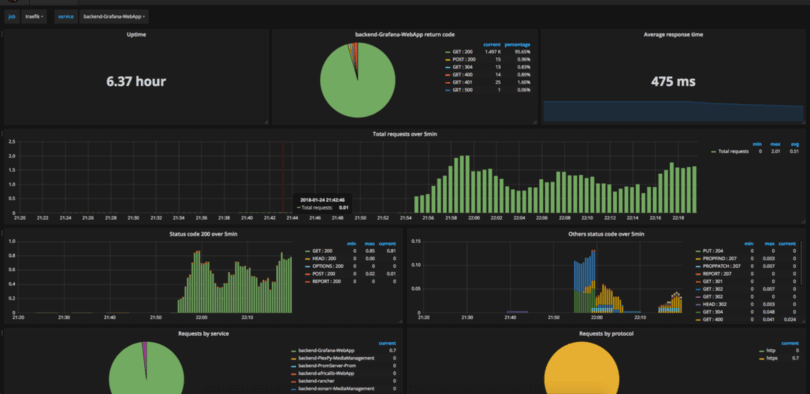

I got the contents of the traefik-dashboard.yaml file that defines the graphs for Grafana from Grafana's amazing dashboard site, and will give you visualizations like this:

You can, of course, customize the graphs to your heart's content by changing the values in the dashboard file. Note, however, that because Grafana isn't configured with any persistent storage, any dashboard you import or create in the UI without putting it in a ConfigMap will disappear when the Pod restarts.
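To keep a dashboard you've built in the UI, you can export its JSON model and wrap it in a ConfigMap. The sketch below builds such a manifest as a dict and prints it as JSON, which kubectl apply -f accepts. It assumes the chart's Grafana sidecar watches for ConfigMaps carrying the label grafana_dashboard; check the grafana.sidecar.dashboards settings in your values if dashboards don't appear. The function and file names are hypothetical.

```python
import json


def dashboard_configmap(name: str, dashboard: dict, namespace: str = "default",
                        label_key: str = "grafana_dashboard") -> dict:
    """Wrap a Grafana dashboard JSON model in a ConfigMap manifest.

    Assumes the Grafana sidecar picks up ConfigMaps labelled with `label_key`.
    """
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace, "labels": {label_key: "1"}},
        # Each data key becomes a dashboard file that the sidecar hands to Grafana.
        "data": {f"{name}.json": json.dumps(dashboard, indent=2)},
    }


if __name__ == "__main__":
    # Hypothetical minimal dashboard; normally this is JSON exported from Grafana.
    board = {"title": "My app", "panels": [], "schemaVersion": 16}
    print(json.dumps(dashboard_configmap("myapp-dashboard", board), indent=2))
```

For example, `python make_dashboard.py > myapp-dashboard.json` followed by `kubectl apply -f myapp-dashboard.json` (both file names hypothetical) would store the dashboard in the cluster so it survives Pod restarts.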

Blackbox Exporter

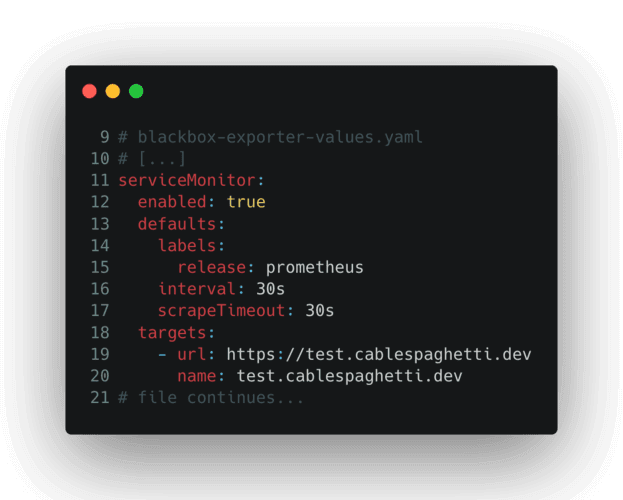

I've also deployed the Prometheus Blackbox Exporter on my cluster, which probes HTTP endpoints. These can be anywhere on the Internet; in this case I'm just monitoring my example website to check that everything is working as expected. I've also deployed another dashboard to Grafana for it.
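Conceptually, each HTTP probe is a single request whose outcome becomes a few metrics. The sketch below approximates that with urllib: it records the status code and duration and reports probe_success as 1 only for a 2xx response, which is the Blackbox http prober's default for valid status codes. This is a simplified model under those assumptions, not the exporter's implementation (which also reports TLS, DNS and timing-phase details), and the helper names are hypothetical.

```python
import http.client
import time
import urllib.error
import urllib.request


def is_success(status: int) -> bool:
    """Treat any 2xx as success, like the http prober's default valid_status_codes."""
    return 200 <= status < 300


def probe_http(url: str, timeout: float = 5.0) -> dict:
    """Fetch `url` once (following redirects) and report blackbox-style results."""
    start = time.monotonic()
    status = 0  # 0 means no HTTP response at all (DNS failure, refused, timeout)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a status code
        status = err.code
    except (OSError, http.client.HTTPException):  # connection-level failures
        status = 0
    return {
        "probe_success": 1 if is_success(status) else 0,
        "probe_http_status_code": status,
        "probe_duration_seconds": time.monotonic() - start,
    }


if __name__ == "__main__":
    # Hypothetical target; use the domain you configure in blackbox-exporter-values.yaml.
    print(probe_http("https://example.com"))
```

An alert on this kind of data is typically a rule that fires while probe_success is 0 for the target.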

Go ahead and edit blackbox-exporter-values.yaml so that it points at the domain of a site you want to monitor, specifically lines 19-20.

You can then add the Blackbox Exporter to your cluster with those values, along with its Grafana dashboard:

```shell
helm upgrade --install blackbox-exporter stable/prometheus-blackbox-exporter --values blackbox-exporter-values.yaml
kubectl apply -f blackbox-exporter-dashboard.yaml
```

You'll now be notified if your site becomes unreachable or returns an HTTP error status, a little like having your own personal uptime monitor.

Monitoring the Monitoring

All of the above is good: we can monitor the cluster itself and be notified if sites outside the cluster go down. But what if the cluster goes down and takes the monitoring with it? One of the alerts we sent to the null receiver in the Prometheus Operator values is Watchdog. This is a Prometheus Rule that always fires as long as the system is up. If you send it somewhere outside your cluster, you can be alerted if this "Dead Man's Switch" ever stops firing, which indicates that your cluster has become unreachable.

At my employer, PulseLive, we developed a simple solution for this, allowing a watchdog webhook to send an alert if it no longer receives a message from the cluster. You can easily deploy your own version of this on another platform of your choice.
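If you want to roll your own, the core logic of such a receiver is small. The sketch below is a hypothetical illustration, not PulseLive's solution: it accepts Alertmanager webhook payloads (a JSON body with an alerts list, each alert carrying status and labels), records the time of the last firing Watchdog, and reports when no heartbeat has arrived within a timeout. It assumes you point the Watchdog route at a webhook receiver instead of null. Alertmanager only re-sends a still-firing alert every repeat_interval, so that interval needs to be comfortably shorter than the timeout.

```python
import time
from typing import Callable


class DeadMansSwitch:
    """Hypothetical heartbeat tracker for Alertmanager's always-firing Watchdog alert."""

    def __init__(self, timeout_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.timeout = timeout_seconds
        self.clock = clock
        # Start the grace period now so a freshly started checker doesn't alert at once.
        self.last_seen = clock()

    def receive(self, payload: dict) -> bool:
        """Handle one webhook body; return True if it contained a firing Watchdog."""
        for alert in payload.get("alerts", []):
            labels = alert.get("labels", {})
            if alert.get("status") == "firing" and labels.get("alertname") == "Watchdog":
                self.last_seen = self.clock()
                return True
        return False

    def is_overdue(self) -> bool:
        """True once no heartbeat has arrived for longer than the timeout."""
        return self.clock() - self.last_seen > self.timeout
```

In practice you would call receive from the HTTP handler that Alertmanager posts to, and check is_overdue on a timer, sending the notification through a channel that doesn't depend on the cluster.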