Introduction

In this learn guide you will get up and running with Longhorn from Rancher Labs on Civo using K3s, a lightweight Kubernetes-compatible daemon.

For this you'll need a Kubernetes cluster; if you don't have one, Rancher's guide to installing K3s walks you through it. We'll then install Longhorn and show an example of how to use it.

One of the principles of Cloud Native applications is that they aim to be stateless, so that you can scale them purely horizontally. However, unless your site is just a brochure, you need to store things somewhere!

The big gorillas in our industry (such as Google and Amazon) have custom systems with their own scalable storage solutions to plug in, but what are the options for dev clusters, small shops and tinkerers?

Rancher Labs announced Project Longhorn in March 2018 to try to fill this gap. Longhorn uses the existing disks of your Kubernetes nodes to provide replicated, stable storage for your Kubernetes pods.

Claim your free credits for Civo.com

For this guide we will use Civo. If you aren't a Civo user yet, you can sign up at Civo.com.

Longhorn Installation Prerequisites

Before you can play with Longhorn, you need a Kubernetes cluster up and running. You can install a simple K3s cluster manually by following Rancher's K3s installation guide or, if you're a tester of our Kubernetes service, you can use that. Although the service isn't publicly available yet, we'll use it here to create the cluster for convenience; the manual K3s steps are also easy to follow.

We recommend Medium instances at a minimum, because we'll be testing stateful storage with MySQL, which can be RAM hungry.

$ civo k8s create longhorn-test --wait

Building new Kubernetes cluster longhorn-test:

Created Kubernetes cluster longhorn-test

Longhorn needs open-iscsi installed on every node in the cluster. If you aren't using our Kubernetes service, run the following on each node (Debian/Ubuntu) in addition to the steps in the K3s installation guide:

sudo apt-get install open-iscsi

You need a Kubernetes configuration file, either saved as ~/.kube/config or referenced by an environment variable called KUBECONFIG. Using an absolute path for KUBECONFIG means kubectl still finds the file when you run it from another directory:

mkdir -p ~/longhorn-play && cd ~/longhorn-play

civo k8s config longhorn-test > civo-longhorn-test-config

export KUBECONFIG="$PWD/civo-longhorn-test-config"
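Before going further, it can be worth confirming that kubectl is picking up the file you just saved. The function below is a small sketch: check_cluster is a hypothetical helper name, and it assumes KUBECONFIG holds a single path (kubectl also accepts a colon-separated list, which this check does not handle), falling back to ~/.kube/config as kubectl does.

```shell
# Sketch: confirm which kubeconfig kubectl will use, then list the nodes.
# Assumes KUBECONFIG is a single path, not a colon-separated list.
check_cluster() {
  local cfg="${KUBECONFIG:-$HOME/.kube/config}"
  if [ ! -f "$cfg" ]; then
    echo "kubeconfig not found: $cfg" >&2
    return 1
  fi
  echo "using kubeconfig: $cfg"
  # Every node should report STATUS Ready before you install Longhorn.
  kubectl get nodes
}
```

Run check_cluster after the export above; a "kubeconfig not found" message usually means the variable points at a relative path from a different directory.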

Installing Rancher Longhorn

There are two steps to installing Longhorn on an existing Kubernetes cluster: install the Longhorn controller and extensions, then create a StorageClass so that pods can use it. The first step is as simple as:

$ kubectl apply -f https://raw.githubusercontent.com/rancher/longhorn/master/deploy/longhorn.yaml

namespace/longhorn-system created

serviceaccount/longhorn-service-account created

...

Creating the StorageClass is one more command. As an optional extra step, you can make the new class the default, so you don't need to specify it every time:

$ kubectl apply -f https://raw.githubusercontent.com/rancher/longhorn/master/examples/storageclass.yaml

storageclass.storage.k8s.io/longhorn created

$ kubectl get storageclass

NAME       PROVISIONER           AGE
longhorn   rancher.io/longhorn   3s

$ kubectl patch storageclass longhorn -p \
  '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/longhorn patched

$ kubectl get storageclass

NAME                 PROVISIONER           AGE
longhorn (default)   rancher.io/longhorn   72s
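To double-check which class Kubernetes treats as the default, you can read the annotation directly. This sketch (default_storage_classes is a hypothetical helper) prints every StorageClass whose storageclass.kubernetes.io/is-default-class annotation is "true". If more than one name comes back (newer K3s releases, for example, ship their own local-path class marked as default), you may want to run the same kubectl patch command as above with "false" against the other class so that new claims land on Longhorn.

```shell
# Sketch: list StorageClasses annotated as the cluster default.
# The dots inside the annotation key are escaped for kubectl's JSONPath.
default_storage_classes() {
  kubectl get storageclass \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.annotations.storageclass\.kubernetes\.io/is-default-class}{"\n"}{end}' \
    | awk -F '\t' '$2 == "true" { print $1 }'
}
```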

Accessing Longhorn's dashboard

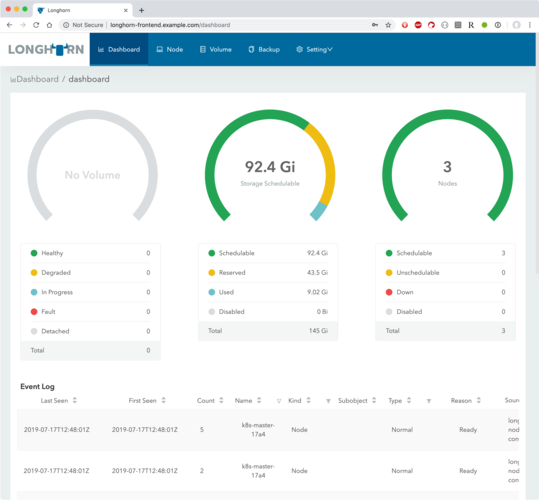

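Longhorn has a lovely, simple dashboard that shows used space, available space, a list of volumes and more. First, we need to create authentication details so the whole world can't see it. The htpasswd tool (in the apache2-utils package on Debian/Ubuntu) prompts for a password for the admin user: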

$ htpasswd -c ./ing-auth admin

$ kubectl create secret generic longhorn-auth \
  --from-file ing-auth --namespace=longhorn-system
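If htpasswd isn't available on your machine, openssl can produce an equivalent entry. This is a sketch under two assumptions: that Traefik accepts the Apache MD5 ("apr1") hash format, which is also what htpasswd produces by default, and that passing the password as an argument is acceptable for you (it will be visible in the process list and your shell history). make_auth_file is a hypothetical helper name.

```shell
# Sketch: write a single htpasswd-style "user:hash" line using openssl
# instead of htpasswd, in the Apache MD5 (apr1) format.
make_auth_file() {
  local user="$1" pass="$2" out="${3:-./ing-auth}" hash
  hash="$(openssl passwd -apr1 "$pass")" || return 1
  printf '%s:%s\n' "$user" "$hash" > "$out"
}
```

For example, make_auth_file admin 'your-password' writes ./ing-auth, after which the kubectl create secret command above works unchanged.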

Now we'll create an Ingress object that uses K3s' built-in Traefik to expose the dashboard to the outside world. Make a file called longhorn-ingress.yaml and put this in it:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: longhorn-ingress
  annotations:
    ingress.kubernetes.io/auth-type: "basic"
    ingress.kubernetes.io/auth-secret: "longhorn-auth"
spec:
  rules:
  - host: longhorn-frontend.example.com
    http:
      paths:
      - backend:
          serviceName: longhorn-frontend
          servicePort: 80

Then apply it with:

$ kubectl apply -f longhorn-ingress.yaml -n longhorn-system

ingress.extensions/longhorn-ingress created

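You now need to add an entry to your /etc/hosts file that points longhorn-frontend.example.com at the IP address of any one of your Kubernetes nodes (replace 1.2.3.4 below). Writing to /etc/hosts requires root, so pipe through sudo tee rather than using a plain redirect:

echo "1.2.3.4 longhorn-frontend.example.com" | sudo tee -a /etc/hosts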

Now you can visit https://longhorn-frontend.example.com in your browser. After authenticating as admin with the password you typed into htpasswd, you should see the Longhorn dashboard, similar to the screenshot at the start of this section.
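If you'd rather not edit /etc/hosts, or want to check the Ingress from a script, curl's --resolve option can map the hostname to a node IP for a single request. The sketch below assumes the plain HTTP entrypoint on port 80 and reuses the placeholder IP 1.2.3.4; dashboard_status is a hypothetical helper. It prints the HTTP status code, so a 401 without credentials and a 200 with them would suggest the basic-auth annotations are being honoured.

```shell
# Sketch: request the Longhorn dashboard through Traefik without touching
# /etc/hosts. --resolve pins longhorn-frontend.example.com:80 to the node IP.
dashboard_status() {
  local ip="$1" creds="$2"   # creds is "user:password", or empty
  set -- -s -o /dev/null -w '%{http_code}\n' \
    --resolve "longhorn-frontend.example.com:80:$ip"
  if [ -n "$creds" ]; then
    set -- "$@" -u "$creds"
  fi
  curl "$@" http://longhorn-frontend.example.com/
}
```

For example, compare dashboard_status 1.2.3.4 '' with dashboard_status 1.2.3.4 'admin:your-password'.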

Installing MySQL with persistent storage

There's not much point in running MySQL in a single container if you lose all of your customers, orders and so on when the container (or its underlying node) dies. So let's configure it to use a new Longhorn persistent volume.

First, we need to create a few resources in Kubernetes. Each of these is a YAML file in a single empty directory (mysql/ below), or you can put them all in a single file (mysql.yaml) separated by lines containing just ---.
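kubectl apply -f ./mysql/ already reads every file in the directory, so this is optional, but if you create the three files below in mysql/ and also want the single-file form, a small shell function can join them. It's a sketch: combine_manifests is a hypothetical helper, and it assumes the output path is outside the directory being read.

```shell
# Sketch: concatenate every *.yaml file in a directory into one multi-document
# file, with a line containing only --- between documents.
combine_manifests() {
  local dir="$1" out="$2" first=1 f
  : > "$out"
  for f in "$dir"/*.yaml; do
    [ -e "$f" ] || continue   # the glob matched nothing
    if [ "$first" -eq 0 ]; then
      printf '%s\n' '---' >> "$out"
    fi
    cat "$f" >> "$out"
    # Make sure the next separator starts on its own line.
    if [ -n "$(tail -c 1 "$f")" ]; then
      printf '\n' >> "$out"
    fi
    first=0
  done
}
```

For example, combine_manifests ./mysql ./mysql.yaml produces the file used by kubectl apply -f mysql.yaml further down.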

A persistent volume in mysql/pv.yaml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysql-pv
  labels:
    name: mysql-data
    type: longhorn
spec:
  capacity:
    storage: 5G
  volumeMode: Filesystem
  storageClassName: longhorn
  accessModes:
    - ReadWriteOnce
  csi:
    driver: io.rancher.longhorn
    fsType: ext4
    volumeAttributes:
      numberOfReplicas: '2'
      staleReplicaTimeout: '20'
    volumeHandle: mysql-data

A PersistentVolumeClaim (an abstract request for storage that a pod can then use), in mysql/pv-claim.yaml:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  labels:
    type: longhorn
    app: example
spec:
  storageClassName: longhorn
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

And a Deployment that runs MySQL and mounts the claim above, in mysql/pod.yaml. (Note: we use password as the MySQL root password to keep things simple, but you should really use a strong password AND store it in a Kubernetes Secret rather than in the YAML file.)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-mysql
  labels:
    app: example
spec:
  selector:
    matchLabels:
      app: example
      tier: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: example
        tier: mysql
    spec:
      containers:
        - image: mysql:5.6
          name: mysql
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: password
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            - name: mysql-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mysql-persistent-storage
          persistentVolumeClaim:
            claimName: mysql-pv-claim
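To follow the advice about Secrets, one option is to generate a Secret manifest from the shell. This is a sketch: mysql_secret_manifest and the Secret name mysql-root are hypothetical, and it assumes the password contains no double quotes or backslashes, since it is written inside a double-quoted YAML string.

```shell
# Sketch: print a Secret manifest holding the MySQL root password.
# stringData takes the value as plain text; the API server stores it
# base64-encoded under .data.
mysql_secret_manifest() {
  local name="$1" password="$2"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
type: Opaque
stringData:
  MYSQL_ROOT_PASSWORD: "${password}"
EOF
}
```

You could then run mysql_secret_manifest mysql-root "$(openssl rand -base64 24)" | kubectl apply -f - in the same namespace as the Deployment, and replace value: password with a valueFrom.secretKeyRef pointing at name mysql-root and key MYSQL_ROOT_PASSWORD. Bear in mind that the mysql image only reads MYSQL_ROOT_PASSWORD when it initialises an empty data directory, so with persistent storage this needs to be in place before the first start.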

Now apply either the folder or the single file (depending on which you chose):

$ kubectl apply -f mysql.yaml

persistentvolumeclaim/mysql-pv-claim created

persistentvolume/mysql-pv created

deployment.apps/my-mysql created

# or

$ kubectl apply -f ./mysql/

persistentvolumeclaim/mysql-pv-claim created

persistentvolume/mysql-pv created

deployment.apps/my-mysql created
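Before testing, it helps to wait until Longhorn has actually provided a volume for the claim. This sketch (wait_for_pvc_bound is a hypothetical helper) polls the claim's status.phase until it reads Bound, giving up after a number of attempts two seconds apart.

```shell
# Sketch: poll a PersistentVolumeClaim until Kubernetes reports it as Bound.
# Gives up after the given number of attempts (default 30, 2 seconds apart).
wait_for_pvc_bound() {
  local claim="$1" attempts="${2:-30}" i=0 phase=""
  while [ "$i" -lt "$attempts" ]; do
    phase="$(kubectl get pvc "$claim" -o jsonpath='{.status.phase}')"
    if [ "$phase" = "Bound" ]; then
      echo "$claim is Bound"
      return 0
    fi
    i=$((i + 1))
    # Only sleep if another attempt is coming.
    if [ "$i" -lt "$attempts" ]; then
      sleep 2
    fi
  done
  echo "$claim is ${phase:-missing} after $attempts attempts" >&2
  return 1
}
```

For example, wait_for_pvc_bound mysql-pv-claim && kubectl wait --for=condition=Ready pod -l app=example,tier=mysql --timeout=180s waits for both the volume and the MySQL pod. The VOLUME column of kubectl get pvc shows which PersistentVolume the claim was bound to.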

Testing MySQL with persistent storage works

Our test is simple: we'll create a new database, delete the pod (the Deployment will create a replacement for us), then connect to the new pod and hopefully still see our new database.

Let's create a new database called should_still_be_here (great name for our production DB!)

$ kubectl get pods | grep mysql

my-mysql-d59b9487b-7g644 1/1 Running 0 2m28s

$ kubectl exec -it my-mysql-d59b9487b-7g644 -- /bin/bash

root@my-mysql-d59b9487b-7g644:/# mysql -u root -p mysql

Enter password:

mysql> create database should_still_be_here;

Query OK, 1 row affected (0.00 sec)

mysql> show databases;

+----------------------+
| Database             |
+----------------------+
| information_schema   |
| #mysql50#lost+found  |
| mysql                |
| performance_schema   |
| should_still_be_here |
+----------------------+
5 rows in set (0.00 sec)

mysql> exit

Bye

root@my-mysql-d59b9487b-7g644:/# exit

exit

Now we'll delete the pod:

$ kubectl delete pod my-mysql-d59b9487b-7g644

After a minute or so, we'll look up the name of the replacement pod, connect to it and check whether our database still exists:

$ kubectl get pods | grep mysql

my-mysql-d59b9487b-8zsn2 1/1 Running 0 84s

$ kubectl exec -it my-mysql-d59b9487b-8zsn2 -- /bin/bash

root@my-mysql-d59b9487b-8zsn2:/# mysql -u root -p mysql

Enter password:

mysql> show databases;

+----------------------+
| Database             |
+----------------------+
| information_schema   |
| #mysql50#lost+found  |
| mysql                |
| performance_schema   |
| should_still_be_here |
+----------------------+
5 rows in set (0.00 sec)

mysql> exit

Bye

root@my-mysql-d59b9487b-8zsn2:/# exit

exit

That was a complete success: our data persisted even though the pod was deleted and recreated.
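The manual steps above can also be scripted. This sketch assumes a kubectl recent enough to accept deploy/NAME with exec (it runs in the first pod of the Deployment), the root password password from the Deployment, and the labels app=example,tier=mysql; database_exists is a hypothetical helper. It retries because the replacement pod takes a while before mysqld accepts connections, and kubectl delete waits for the old pod to be removed by default.

```shell
# Sketch: check whether a database exists in the MySQL pod behind the
# my-mysql Deployment, retrying while a replacement pod starts up.
# Assumes the root password "password" used in the Deployment above.
database_exists() {
  local db="$1" attempts="${2:-10}" i=0
  while [ "$i" -lt "$attempts" ]; do
    # -N skips the column header, so each output line is one database name.
    if kubectl exec deploy/my-mysql -- \
        mysql -uroot -ppassword -N -e 'SHOW DATABASES' 2>/dev/null \
        | grep -qxF "$db"; then
      return 0
    fi
    i=$((i + 1))
    if [ "$i" -lt "$attempts" ]; then
      sleep 5
    fi
  done
  return 1
}

# Example run of the whole test:
#   kubectl exec deploy/my-mysql -- mysql -uroot -ppassword \
#     -e 'CREATE DATABASE IF NOT EXISTS should_still_be_here'
#   kubectl delete pod -l app=example,tier=mysql
#   database_exists should_still_be_here && echo "data survived"
```

Note that a missing database also uses up every retry before returning, so a negative result takes roughly attempts × 5 seconds.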

Wrapping up

We've now installed replicated persistent storage on a Kubernetes cluster and confirmed that it works by deleting our MySQL pod and seeing our existing data survive.

If you would like to continue learning you can:

- Try deleting and recreating one of your Kubernetes nodes and see if the storage persists

- Join our Community to chat with the other cloud native developers

Let us know how you get on by tweeting us @CivoCloud!