When moving production workloads to a new containerized environment, application traffic management (ATM) can become complex. This is especially true for organizations that are transitioning workloads to Kubernetes, as managing traffic requires load balancing and configuring other Kubernetes networking components, such as ingress and ingress controllers.

In this blog, we will show you how to minimize these complications with one of the many ingress controllers available: the NGINX ingress controller.

Ingress overview

If you’ve used Kubernetes before, there’s a good chance you’ve heard about 'load balancers'. These balance network load by distributing traffic across multiple instances of a service. However, a Kubernetes load balancer can only route to a single service.

The 'ingress' API object exposes routes from outside a cluster to numerous services within the cluster. This traffic routing is controlled by a set of rules defined in the ingress resource. However, an ingress resource does nothing on its own: to satisfy it, you need an ingress controller.
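To make the idea of rules more concrete, here is a minimal sketch of an ingress resource written as a Python dictionary that mirrors the fields of a `networking.k8s.io/v1` Ingress manifest. The host name, service names, ports, and the `nginx` ingress class are hypothetical values chosen for illustration, and `list_routes` is a helper invented for this example rather than part of any Kubernetes client.

```python
# Hypothetical ingress resource, mirroring a networking.k8s.io/v1 Ingress manifest.
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "shop-ingress"},
    "spec": {
        "ingressClassName": "nginx",  # assumed class name; selects the controller
        "rules": [
            {
                "host": "shop.example.com",
                "http": {
                    "paths": [
                        {
                            "path": "/cart",
                            "pathType": "Prefix",
                            "backend": {"service": {"name": "cart", "port": {"number": 8080}}},
                        },
                        {
                            "path": "/",
                            "pathType": "Prefix",
                            "backend": {"service": {"name": "storefront", "port": {"number": 80}}},
                        },
                    ]
                },
            }
        ],
    },
}


def list_routes(resource):
    """Flatten the ingress rules into (host, path, service, port) tuples."""
    routes = []
    for rule in resource["spec"]["rules"]:
        for p in rule["http"]["paths"]:
            svc = p["backend"]["service"]
            routes.append((rule["host"], p["path"], svc["name"], svc["port"]["number"]))
    return routes


for host, path, service, port in list_routes(ingress):
    print(f"{host}{path} -> {service}:{port}")
```

Each tuple is one route from outside the cluster to a service inside it. The resource only declares these routes; the ingress controller is what turns them into working load balancer configuration.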

What is an ingress controller?

An ingress controller is a specialized load balancer for containerized environments such as Kubernetes. It helps form a bridge between the external services and Kubernetes services by removing the complexities of Kubernetes application traffic routing. As a Kubernetes cluster component, ingress controllers help ease those complexities by configuring an HTTP load balancer based on the cluster user’s ingress class and other resources.

What are the duties of an ingress controller?

An ingress controller performs a variety of tasks inside a Kubernetes cluster, such as:

- Accept traffic from outside the Kubernetes platform and load balance it to the pods or containers running inside the environment.
- Configure traffic routing rules from the ingress resources that are created and deployed as objects through the Kubernetes API.
- Monitor the pods running in Kubernetes and automatically update the load balancing rules when any pod is added to or removed from a Kubernetes service.
- Manage egress traffic (traffic transferred from a host network to an outside network) for services that need to communicate with services outside a cluster.
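The first and third duties in the list above can be sketched together in a few lines of Python. This is an illustration only: the `Upstream` class, its method names, and the pod addresses are hypothetical, and round-robin is just one assumed balancing strategy, not a statement about what any particular ingress controller uses by default.

```python
from itertools import count


class Upstream:
    """Tracks the pod endpoints behind one service and picks them round-robin."""

    def __init__(self, service):
        self.service = service
        self.endpoints = []
        self._counter = count()

    def on_pod_added(self, address):
        # Called when a new pod joins the service.
        if address not in self.endpoints:
            self.endpoints.append(address)

    def on_pod_removed(self, address):
        # Called when a pod leaves the service.
        if address in self.endpoints:
            self.endpoints.remove(address)

    def pick(self):
        # Choose the next endpoint in rotation for an incoming request.
        if not self.endpoints:
            raise RuntimeError(f"no ready pods for service {self.service!r}")
        return self.endpoints[next(self._counter) % len(self.endpoints)]


upstream = Upstream("cart")
upstream.on_pod_added("10.0.0.4:8080")
upstream.on_pod_added("10.0.0.5:8080")
first, second, third = upstream.pick(), upstream.pick(), upstream.pick()

# Once a pod is removed, requests go only to the remaining endpoint.
upstream.on_pod_removed("10.0.0.4:8080")
after_removal = upstream.pick()
```

The point of the sketch is that the endpoint list changes as pods come and go, so nobody has to edit the load balancing rules by hand.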

How can the NGINX ingress controller help?

The NGINX ingress controller is one of the most popular ingress controllers on the market, so let's take a closer look at how it can help a cluster user.

The NGINX ingress controller is a production-grade ingress controller that runs in a Kubernetes environment. It monitors resources to discover requests for services that need ingress load balancing, controls traffic to Kubernetes services, and manages networking within a cluster. This controller lets you harness Kubernetes networking from the transport layer (layer 4) through the application layer (layer 7) and, as a result, enables tighter security.

How does an NGINX ingress controller work?

An NGINX ingress controller works by intercepting incoming traffic to a Kubernetes cluster and using the rules defined in the ingress resource to route the traffic to the appropriate service within the cluster. It provides a convenient way to manage external access to the services in a Kubernetes cluster and can offer additional features such as load balancing.
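The routing decision described above can be sketched as a small Python function. This is a simplified model, not how NGINX is implemented: the real controller translates ingress rules into NGINX configuration and lets NGINX do the matching. The rule table, host names, and service names below are hypothetical, and the matching follows the Kubernetes `Prefix` path type, where prefixes are compared on whole path elements and the longest match wins.

```python
# Hypothetical rule table: (host, path prefix, service name).
RULES = [
    ("shop.example.com", "/", "storefront"),
    ("shop.example.com", "/cart", "cart"),
    ("api.example.com", "/", "api"),
]


def prefix_matches(prefix, path):
    """Match on whole path elements: /cart matches /cart/items but not /cartoon."""
    if prefix == "/":
        return True
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(host, path):
    """Return the service for the longest matching prefix on this host, or None."""
    candidates = [
        (prefix, service)
        for rule_host, prefix, service in RULES
        if rule_host == host and prefix_matches(prefix, path)
    ]
    if not candidates:
        return None
    # The most specific (longest) prefix takes precedence.
    return max(candidates, key=lambda c: len(c[0]))[1]


print(route("shop.example.com", "/cart/items"))
print(route("shop.example.com", "/about"))
```

A request for an unknown host returns `None` here; in a real cluster it would typically be handled by a default backend.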

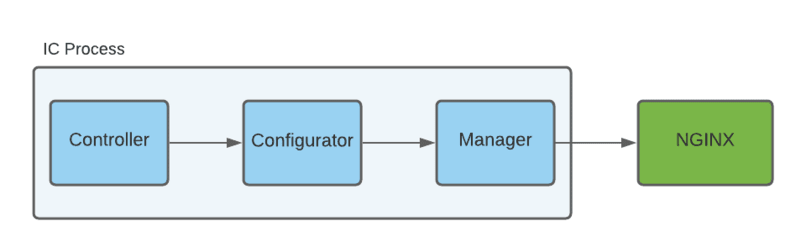

It’s typically composed of three components (the controller, configurator, and manager) which make up the control loop:

Source: NGINX official documentation

- Controller: As the main component, the controller represents the ingress controller process as an instance, including its primary and helper components. It also includes the synchronization method, which Workqueue (a helper component of the ingress controller process) calls to process a changed ingress resource.
- Configurator: Responsible for generating the configuration files for NGINX, the configurator uses Manager to write the generated files and reload NGINX. This component also generates the TLS keys and certificates and the JSON Web Key Sets (JWKs) based on the Kubernetes resource.
- Manager: This component controls the NGINX lifecycle by managing starting, reloading, and quitting. It also manages the configurator-generated NGINX configuration files, TLS keys and certificates, and JWKs.
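The way these three components cooperate can be sketched in Python as follows. The class and method names are hypothetical and only loosely follow the component names above. The generated text is a heavily simplified stand-in for real NGINX configuration, and the reload is simulated with a counter instead of signalling an NGINX process.

```python
from collections import deque


class Manager:
    """Stands in for the component that writes files and reloads NGINX."""

    def __init__(self):
        self.files = {}
        self.reloads = 0

    def write_config(self, name, content):
        self.files[name] = content

    def reload(self):
        self.reloads += 1  # a real manager would reload the NGINX process here


class Configurator:
    """Generates configuration text from a resource and hands it to the manager."""

    def __init__(self, manager):
        self.manager = manager

    def apply(self, resource):
        lines = [f"# generated for ingress {resource['name']}"]
        for path, service in resource["routes"]:
            lines.append(f"location {path} {{ proxy_pass http://{service}; }}")
        self.manager.write_config(f"{resource['name']}.conf", "\n".join(lines))
        self.manager.reload()


class Controller:
    """Queues changed resources and syncs them one at a time."""

    def __init__(self, configurator):
        self.configurator = configurator
        self.workqueue = deque()

    def on_change(self, resource):
        self.workqueue.append(resource)

    def sync(self, resource):
        self.configurator.apply(resource)

    def run_once(self):
        # Drain the queue, syncing each changed resource in arrival order.
        while self.workqueue:
            self.sync(self.workqueue.popleft())


manager = Manager()
controller = Controller(Configurator(manager))
controller.on_change({"name": "shop", "routes": [("/cart", "cart"), ("/", "storefront")]})
controller.run_once()
print(manager.files["shop.conf"])
```

Each changed ingress resource passes through the queue once, produces one configuration file, and triggers one reload, which is the essence of the control loop.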

Using the NGINX ingress controller on Civo

Interested in learning more about using the NGINX ingress controller on Civo? See what you can do with Civo Marketplace and get production-ready Kubernetes in seconds.