Picture this: you upload a PDF to a folder, and within seconds, a structured summary appears next to it. No manual triggers. No complicated workflows. Just effortless document processing that actually works.

That’s exactly what we’re building today: an automated summarization agent running on Civo Kubernetes with GPU support. It watches a Civo Object Store bucket, detects new files, sends them to a relaxAI-powered summarizer, and stores the results as JSON sidecars. The whole thing runs 24/7, with minimal infrastructure cost, and proves that AI pipelines don't need to be enterprise nightmares.

Prerequisites

As well as having basic Kubernetes knowledge, you will need to ensure that the following is in place before we dive in:

- A Civo account (this will require access to GPU-enabled nodes in your chosen Civo region)

- Docker installed locally

- Python 3.9+ installed

- relaxAI API access

- kubectl CLI configured and ready

Project folder structure

First, let's set up our project structure. Create this locally:

    civo-summarizer-agent/
    ├── summarizer/
    │   ├── app.py
    │   ├── requirements.txt
    │   └── Dockerfile
    ├── watcher/
    │   ├── watcher.py
    │   ├── requirements.txt
    │   └── Dockerfile
    └── k8s/
        ├── namespace.yaml
        ├── summarizer-deployment.yaml
        ├── watcher-deployment.yaml
        └── secret.yaml

Step 1: Create a GPU Kubernetes cluster on Civo

First, provision a GPU-enabled cluster:

- Log in to the Civo Dashboard

- Create a new Kubernetes cluster

- Add a GPU node pool (one node is sufficient for this demo)

- Download your kubeconfig

- Connect to the cluster by running set KUBECONFIG=path-to-kubeconfig-file in your terminal (use export instead of set on Linux or macOS).

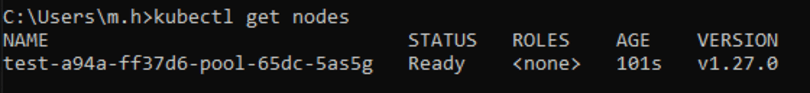

Verify connectivity:

    kubectl get nodes
    kubectl get pods -A

Create a namespace for our project:

Create k8s/namespace.yaml:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: summarizer-agent

Apply it:

    kubectl apply -f k8s/namespace.yaml
    kubectl config set-context --current --namespace=summarizer-agent

Step 2: Set up Civo Object Store

Civo's Object Store is S3-compatible and simple to set up:

Create Object Store credentials:

- In the Civo dashboard, go to Object Stores → Credentials

- Click Create a new Credential

- Note down your Access Key and Secret Key (you'll need these)

Create an Object Store instance:

- Go to Object Stores → Create an Object Store

- Name it summarizer-store

- Select the same region as your cluster (e.g., lon1)

- Click Create store

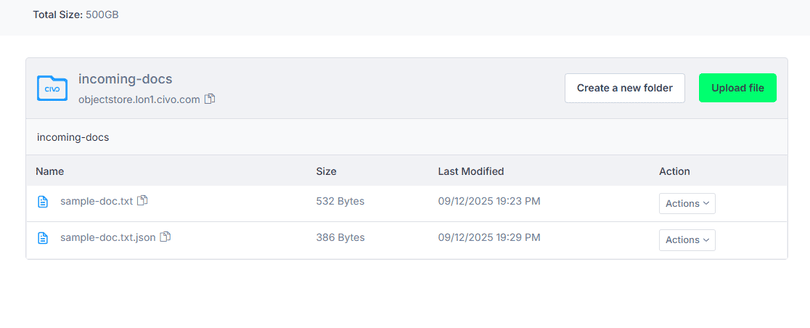

Create a bucket:

- Click on your newly created Object Store instance

- Click Create a new folder

- Name it incoming-docs

- Click Create folder

Your bucket URL will look like this: https://objectstore.lon1.civo.com/incoming-docs

Replace lon1 with your actual region throughout this tutorial.
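The endpoint and the bucket URL follow one pattern, so it can help to derive both from the region in a single place. The sketch below is only an illustration: the helper names object_store_endpoint and bucket_url are hypothetical, and the URL pattern is inferred from the example URL above rather than taken from Civo's API documentation.

```python
def object_store_endpoint(region: str) -> str:
    """Build the S3-compatible endpoint for a region (pattern assumed from the example URL)."""
    return f"https://objectstore.{region}.civo.com"


def bucket_url(region: str, bucket: str) -> str:
    """Build the path-style URL of a bucket inside that endpoint."""
    return f"{object_store_endpoint(region)}/{bucket}"


# "lon1" and "incoming-docs" are the example values used in this tutorial.
print(object_store_endpoint("lon1"))
print(bucket_url("lon1", "incoming-docs"))
```

The first value is what goes into S3_ENDPOINT later on; the bucket name is passed separately as S3_BUCKET.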

Step 3: Build the summarizer service (relaxAI)

This is our GPU-powered summarization engine, utilizing the relaxAI SDK.

Create summarizer/app.py:

    # ============================================================
    # 1. SETUP & CONFIGURATION
    # Initializes FastAPI, loads API key, sets up relaxAI client,
    # and defines request/response data models.
    # ============================================================
    from fastapi import FastAPI, HTTPException
    from pydantic import BaseModel
    from relaxAI import relaxAI
    import os, json
    from typing import List

    app = FastAPI()

    RELAXAI_API_KEY = os.getenv("RELAXAI_API_KEY")
    client = relaxAI(api_key=RELAXAI_API_KEY) if RELAXAI_API_KEY else None

    class SummarizeRequest(BaseModel):
        content: str
        filename: str

    class SummarizeResponse(BaseModel):
        title: str
        summary: str
        keywords: List[str]
        word_count: int
        filename: str

    # ============================================================
    # 2. API ENDPOINTS
    # Provides a health check endpoint and the main summarization route.
    # ============================================================
    @app.get("/health")
    async def health():
        return {"status": "healthy", "relaxAI_configured": client is not None}

    @app.post("/summarize", response_model=SummarizeResponse)
    async def summarize(request: SummarizeRequest):
        if not client:
            raise HTTPException(status_code=500, detail="relaxAI API key not configured")

        # Limit content size to avoid token overflow
        content_preview = request.content[:8000]

        # Build summarization prompt for the model
        prompt = f"""
        Analyze the following document and provide a structured summary.

        Document: {request.filename}

        Content:
        {content_preview}

        Provide your response in this exact JSON format:
        {{
            "title": "Generated title for the document",
            "summary": "A concise 2-3 sentence summary",
            "keywords": ["keyword1", "keyword2", "keyword3"],
            "word_count": <integer word count>
        }}

        Return ONLY the JSON.
        """

        # ============================================================
        # 3. SUMMARIZATION LOGIC & ERROR HANDLING
        # Sends prompt to relaxAI, cleans the response, parses JSON,
        # and safely returns a validated structured summary.
        # ============================================================
        try:
            chat_completion_response = client.chat.create_completion(
                messages=[
                    {
                        "role": "system",
                        "content": "You are a document analysis expert. Always respond with valid JSON only."
                    },
                    {
                        "role": "user",
                        "content": prompt
                    }
                ],
                model="Llama-4-Maverick-17B-128E",
                temperature=0.3,
                max_tokens=500
            )

            # Extract raw AI response
            response_content = chat_completion_response.choices[0].message.content.strip()

            # Remove markdown code fences if present
            if response_content.startswith("```json"):
                response_content = response_content[7:]
            if response_content.startswith("```"):
                response_content = response_content[3:]
            if response_content.endswith("```"):
                response_content = response_content[:-3]
            response_content = response_content.strip()

            # Parse model JSON output
            summary_data = json.loads(response_content)
            summary_data["filename"] = request.filename

            return SummarizeResponse(**summary_data)

        except json.JSONDecodeError as e:
            raise HTTPException(500, f"Failed to parse AI response as JSON: {e}")
        except Exception as e:
            raise HTTPException(500, f"Summarization failed: {e}")

    # Run locally if executed directly
    if __name__ == "__main__":
        import uvicorn
        uvicorn.run(app, host="0.0.0.0", port=8000)

Create summarizer/requirements.txt:

    fastapi==0.104.1
    uvicorn==0.24.0
    relaxAI
    pydantic==2.5.0

Create summarizer/Dockerfile:

    FROM python:3.11-slim

    WORKDIR /app

    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    COPY app.py .

    EXPOSE 8000

    CMD ["python", "app.py"]
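The step in app.py that removes markdown code fences from the model's reply is easy to check in isolation. Below is a minimal sketch of that logic as a standalone function; strip_code_fences and FENCE are hypothetical names introduced here, and the sample reply is invented for illustration, not real model output.

```python
import json

FENCE = "`" * 3  # three backticks, the markdown code-fence marker


def strip_code_fences(text: str) -> str:
    """Drop a leading fence (with or without a 'json' tag) and a trailing fence."""
    text = text.strip()
    if text.startswith(FENCE + "json"):
        text = text[len(FENCE) + len("json"):]
    elif text.startswith(FENCE):
        text = text[len(FENCE):]
    if text.endswith(FENCE):
        text = text[:-len(FENCE)]
    return text.strip()


# Invented reply shaped like the JSON the prompt asks for.
raw = FENCE + 'json\n{"title": "Demo", "keywords": ["a", "b"]}\n' + FENCE
parsed = json.loads(strip_code_fences(raw))
print(parsed["title"])
```

Text that arrives without fences passes through unchanged apart from whitespace trimming, so the function is safe to apply to every reply.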

Step 4: Build the watcher agent

This pod polls Civo Object Store and orchestrates the summarization workflow.

Create watcher/watcher.py:

    # ============================================================
    # 1. SETUP & CONFIGURATION
    # Loads env variables, configures S3 client, and defines helpers.
    # ============================================================
    import boto3
    import httpx
    import json
    import time
    import os
    from typing import Optional
    from botocore.client import Config

    S3_ENDPOINT = os.getenv("S3_ENDPOINT")
    S3_ACCESS_KEY = os.getenv("S3_ACCESS_KEY")
    S3_SECRET_KEY = os.getenv("S3_SECRET_KEY")
    S3_BUCKET = os.getenv("S3_BUCKET", "incoming-docs")
    SUMMARIZER_URL = os.getenv("SUMMARIZER_URL", "http://summarizer-service:8000")
    POLL_INTERVAL = int(os.getenv("POLL_INTERVAL", "10"))

    # Civo Object Store client
    s3 = boto3.client(
        's3',
        endpoint_url=S3_ENDPOINT,
        aws_access_key_id=S3_ACCESS_KEY,
        aws_secret_access_key=S3_SECRET_KEY,
        config=Config(signature_version='s3v4')
    )

    def get_file_content(key: str) -> Optional[str]:
        """Downloads a file and returns decoded text, or None if the download fails."""
        try:
            response = s3.get_object(Bucket=S3_BUCKET, Key=key)
            content = response['Body'].read()
            try:
                return content.decode('utf-8')
            except UnicodeDecodeError:
                return content.decode('latin-1')
        except Exception as e:
            print(f"Error downloading {key}: {e}")
            return None

    def upload_json(key: str, data: dict):
        """Uploads JSON summary back to Object Store."""
        try:
            s3.put_object(
                Bucket=S3_BUCKET,
                Key=key,
                Body=json.dumps(data, indent=2),
                ContentType='application/json'
            )
            print(f"✓ Uploaded {key}")
        except Exception as e:
            print(f"Error uploading {key}: {e}")

    def file_needs_processing(filename: str, existing_files: set) -> bool:
        """Checks if a file doesn’t already have a .json summary."""
        if filename.endswith('.json'):
            return False
        return f"{filename}.json" not in existing_files

    # ============================================================
    # 2. FILE PROCESSING LOGIC
    # Downloads a file, sends to summarizer API, and uploads results.
    # ============================================================
    async def process_file(filename: str):
        """Handles full summarize → upload workflow for a single file."""
        print(f"Processing {filename}...")

        content = get_file_content(filename)
        if not content:
            print(f"✗ Could not read {filename}")
            return

        try:
            async with httpx.AsyncClient(timeout=120.0) as client:
                response = await client.post(
                    f"{SUMMARIZER_URL}/summarize",
                    json={"content": content, "filename": filename}
                )
                response.raise_for_status()
                summary = response.json()

            json_key = f"{filename}.json"
            upload_json(json_key, summary)
            print(f"✓ Successfully processed {filename}")

        except httpx.TimeoutException:
            print(f"✗ Timeout while processing {filename}")
        except httpx.HTTPStatusError as e:
            print(f"✗ HTTP error: {e.response.status_code}")
        except Exception as e:
            print(f"✗ Failed to process {filename}: {e}")

    # ============================================================
    # 3. WATCH LOOP
    # Polls the bucket, finds new files, and processes them continuously.
    # ============================================================
    async def watch_bucket():
        print("="*60)
        print("CIVO SUMMARIZER WATCHER STARTED")
        print("="*60)
        print(f"Bucket: {S3_BUCKET}")
        print(f"Endpoint: {S3_ENDPOINT}")
        print(f"Polling interval: {POLL_INTERVAL}s")
        print(f"Summarizer URL: {SUMMARIZER_URL}")
        print("="*60)

        while True:
            try:
                resp = s3.list_objects_v2(Bucket=S3_BUCKET)

                if 'Contents' not in resp:
                    print("Bucket is empty, waiting...")
                    time.sleep(POLL_INTERVAL)
                    continue

                existing_files = {obj['Key'] for obj in resp['Contents']}
                files_to_process = [
                    f for f in existing_files if file_needs_processing(f, existing_files)
                ]

                if files_to_process:
                    print(f"\nFound {len(files_to_process)} file(s) to process")
                    for f in files_to_process:
                        await process_file(f)
                else:
                    print(".", end="", flush=True)

            except Exception as e:
                print(f"\n✗ Error in watch loop: {e}")

            time.sleep(POLL_INTERVAL)

    if __name__ == "__main__":
        import asyncio
        asyncio.run(watch_bucket())

Create watcher/requirements.txt:

    boto3==1.29.7
    httpx==0.25.1

Create watcher/Dockerfile:

    FROM python:3.11-slim

    WORKDIR /app

    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    COPY watcher.py .

    CMD ["python", "watcher.py"]
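The watcher decides what to process purely from object names: a file is pending when no sibling named after it with a .json suffix exists, and .json objects are never treated as inputs. Here is a small sketch of that rule applied to a plain list of keys, with no Object Store involved; pending_keys is a hypothetical helper and the listing is made-up sample data.

```python
from typing import Iterable, List


def pending_keys(keys: Iterable[str]) -> List[str]:
    """Return keys that have no '<key>.json' sidecar, ignoring the sidecars themselves."""
    existing = set(keys)
    return sorted(
        key for key in existing
        if not key.endswith(".json") and f"{key}.json" not in existing
    )


# Made-up bucket listing: report.txt already has a summary, the others do not.
listing = ["report.txt", "report.txt.json", "notes.md", "Sample.pdf"]
print(pending_keys(listing))
```

One consequence of this convention is that an uploaded document whose own name ends in .json is never summarized, and deleting a sidecar causes its source file to be processed again on the next poll.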

Step 5: Dockerization

Build and push both images to a container registry. We'll use Docker Hub for simplicity.

    # Log in to Docker Hub
    docker login

    # Build and push summarizer
    cd summarizer
    docker build -t yourusername/civo-summarizer:latest .
    docker push yourusername/civo-summarizer:latest

    # Build and push watcher
    cd ../watcher
    docker build -t yourusername/civo-watcher:latest .
    docker push yourusername/civo-watcher:latest
    cd ..

Step 6: Deployment

Now, we will deploy everything to Kubernetes with all necessary environment variables.

Create k8s/secret.yaml:

    apiVersion: v1
    kind: Secret
    metadata:
      name: summarizer-secrets
      namespace: summarizer-agent
    type: Opaque
    stringData:
      S3_ENDPOINT: "https://objectstore.lon1.civo.com" # Change region as needed
      S3_ACCESS_KEY: "your-civo-access-key-here"
      S3_SECRET_KEY: "your-civo-secret-key-here"
      S3_BUCKET: "incoming-docs"
      RELAXAI_API_KEY: "your-relaxAI-api-key-here"
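Kubernetes accepts plain text under stringData and stores it base64-encoded under data, which is what kubectl get secret -o yaml shows afterwards. The sketch below reproduces that encoding so a stored value can be recognised or decoded; to_secret_data is a hypothetical helper name, and the point to remember is that base64 is an encoding, not encryption.

```python
import base64
from typing import Dict


def to_secret_data(string_data: Dict[str, str]) -> Dict[str, str]:
    """Base64-encode each value the way Kubernetes stores Secret 'data' fields."""
    return {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in string_data.items()
    }


encoded = to_secret_data({"S3_BUCKET": "incoming-docs"})
print(encoded)

# Decoding recovers the original text, so treat the manifest as sensitive.
print(base64.b64decode(encoded["S3_BUCKET"]).decode("utf-8"))
```

Because anyone with read access to the Secret can decode it, it is worth keeping the filled-in secret.yaml out of version control.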

Create k8s/summarizer-deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: summarizer
      namespace: summarizer-agent
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: summarizer
      template:
        metadata:
          labels:
            app: summarizer
        spec:
          containers:
            - name: summarizer
              image: yourusername/civo-summarizer:latest
              ports:
                - containerPort: 8000
              env:
                - name: RELAXAI_API_KEY
                  valueFrom:
                    secretKeyRef:
                      name: summarizer-secrets
                      key: RELAXAI_API_KEY
              resources:
                requests:
                  memory: "2Gi"
                  cpu: "1000m"
                  nvidia.com/gpu: "1"
                limits:
                  memory: "4Gi"
                  cpu: "2000m"
                  nvidia.com/gpu: "1"
              livenessProbe:
                httpGet:
                  path: /health
                  port: 8000
                initialDelaySeconds: 30
                periodSeconds: 10
              readinessProbe:
                httpGet:
                  path: /health
                  port: 8000
                initialDelaySeconds: 10
                periodSeconds: 5
          nodeSelector:
            node.kubernetes.io/instance-type: g4-g.4xlarge.kube.cluster # Adjust based on your GPU node type
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: summarizer-service
      namespace: summarizer-agent
    spec:
      selector:
        app: summarizer
      ports:
        - protocol: TCP
          port: 8000
          targetPort: 8000
      type: ClusterIP

Create k8s/watcher-deployment.yaml:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: watcher
      namespace: summarizer-agent
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: watcher
      template:
        metadata:
          labels:
            app: watcher
        spec:
          containers:
            - name: watcher
              image: yourusername/civo-watcher:latest
              env:
                - name: S3_ENDPOINT
                  valueFrom:
                    secretKeyRef:
                      name: summarizer-secrets
                      key: S3_ENDPOINT
                - name: S3_ACCESS_KEY
                  valueFrom:
                    secretKeyRef:
                      name: summarizer-secrets
                      key: S3_ACCESS_KEY
                - name: S3_SECRET_KEY
                  valueFrom:
                    secretKeyRef:
                      name: summarizer-secrets
                      key: S3_SECRET_KEY
                - name: S3_BUCKET
                  valueFrom:
                    secretKeyRef:
                      name: summarizer-secrets
                      key: S3_BUCKET
                - name: SUMMARIZER_URL
                  value: "http://summarizer-service:8000"
                - name: POLL_INTERVAL
                  value: "10"
              resources:
                requests:
                  memory: "256Mi"
                  cpu: "100m"
                limits:
                  memory: "512Mi"
                  cpu: "500m"

Deploy everything:

    # Make sure you're in the right namespace
    kubectl config set-context --current --namespace=summarizer-agent

    # Apply secret (make sure you've edited it with real credentials!)
    kubectl apply -f k8s/secret.yaml

    # Deploy summarizer with GPU
    kubectl apply -f k8s/summarizer-deployment.yaml

    # Deploy watcher
    kubectl apply -f k8s/watcher-deployment.yaml

    # Check deployment status
    kubectl get pods -n summarizer-agent
    kubectl get services -n summarizer-agent

    # Watch pod status
    kubectl get pods -n summarizer-agent -w

    # Check logs
    kubectl logs -f deployment/summarizer -n summarizer-agent
    kubectl logs -f deployment/watcher -n summarizer-agent

Step 7: End-to-end testing

Time to see your AI pipeline in action!

- Go to Civo Dashboard → Object Stores → incoming-docs → Browse Files

- Click Upload File

- Upload Sample.pdf, a sample file containing text content

- Watch the logs:

    kubectl logs -f deployment/watcher

- Refresh the bucket. You will see:

    Sample.pdf
    Sample.pdf.json

Your automated summarization pipeline works.
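If you would rather script the test upload than use the dashboard, the same boto3 put_object call the watcher relies on can push a file into the bucket. This is a rough sketch under stated assumptions: upload_text is a hypothetical helper, sample.txt and its contents are invented test data, and the script reads the same environment variables as the watcher (S3_ENDPOINT, S3_ACCESS_KEY, S3_SECRET_KEY, S3_BUCKET), which you would need to export locally.

```python
import os


def upload_text(s3_client, bucket: str, key: str, text: str) -> str:
    """Upload text as an object and return the sidecar key the watcher should create."""
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=text.encode("utf-8"),
        ContentType="text/plain",
    )
    return f"{key}.json"


# Only contacts a real Object Store when the watcher's variables are set.
if __name__ == "__main__" and os.getenv("S3_ENDPOINT"):
    import boto3  # same library the watcher uses
    from botocore.client import Config

    s3 = boto3.client(
        "s3",
        endpoint_url=os.environ["S3_ENDPOINT"],
        aws_access_key_id=os.environ["S3_ACCESS_KEY"],
        aws_secret_access_key=os.environ["S3_SECRET_KEY"],
        config=Config(signature_version="s3v4"),
    )
    bucket = os.getenv("S3_BUCKET", "incoming-docs")
    sidecar = upload_text(s3, bucket, "sample.txt", "Hello from the summarizer test.")
    print(f"Uploaded sample.txt; look for {sidecar} after the next poll")
```

Taking the client as a parameter keeps the helper testable with a stand-in object, without credentials or network access.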

Troubleshooting

If the summaries aren't being generated:

    # Check pod status
    kubectl get pods -n summarizer-agent

    # Check summarizer health (port-forward keeps running, so run curl in a second terminal)
    kubectl port-forward deployment/summarizer 8000:8000 -n summarizer-agent
    curl http://localhost:8000/health

    # Check watcher logs for errors
    kubectl logs deployment/watcher -n summarizer-agent | grep -i error
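The /health response carries two fields, status and relaxAI_configured, and a pod can report healthy while the API key is missing. A small sketch of a check that looks at both is shown below; summarizer_ready is a hypothetical helper, the default URL assumes the port-forward above is running, and the opener parameter exists only so the function can be exercised without a live service.

```python
import json
import urllib.request


def summarizer_ready(base_url: str = "http://localhost:8000",
                     opener=urllib.request.urlopen) -> bool:
    """Return True only if /health reports healthy and the relaxAI key is configured."""
    with opener(f"{base_url}/health", timeout=5) as resp:
        payload = json.load(resp)
    return payload.get("status") == "healthy" and bool(payload.get("relaxAI_configured"))
```

With the port-forward active, calling summarizer_ready() returns False when RELAXAI_API_KEY did not reach the pod, which usually points to a problem in secret.yaml or the secretKeyRef in the deployment.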

Summary

This tutorial demonstrates how to build a production-ready AI automation pipeline without the complexity typically associated with enterprise AI platforms. Using Civo’s GPU-powered Kubernetes, GPU resources are provisioned in minutes, enabling fast experimentation and scalable production workflows without long lead times or operational overhead.

By combining Object Store, lightweight Kubernetes pods, and a simple polling architecture with relaxAI for inference, the pipeline remains efficient, cost-effective, and easy to operate at scale. The result is a practical blueprint for AI automation that can grow from small experiments to high-volume document processing, proving that modern AI pipelines can be both powerful and straightforward when built on the right foundation.

Additional resources

If you are interested in learning more about this topic, check out some of these resources: