We are in mid-2025, and teams across industries are rolling out large language models, or LLMs, to power everything from conversational agents to document understanding. However, getting them to run smoothly in production is still a challenge. A production-ready deployment takes more than putting a model in a container and tossing it into a Kubernetes cluster. The demands of real-world traffic, security audits, and tight budgets mean that every aspect of deployment, from provisioning GPU nodes to trimming model startup time, must be handled thoughtfully.

In this post, we unpack the eight pillars that separate production-grade LLM deployments from fragile proofs of concept. You’ll get practical insights into what matters, what trips teams up, and what you can do to improve. And since managing infrastructure isn’t why you got into AI, we’ll also look at how Civo AI helps take the pressure off with fast GPU provisioning, built-in observability, and a developer-friendly approach, so you can focus more on your model and less on fighting YAML.

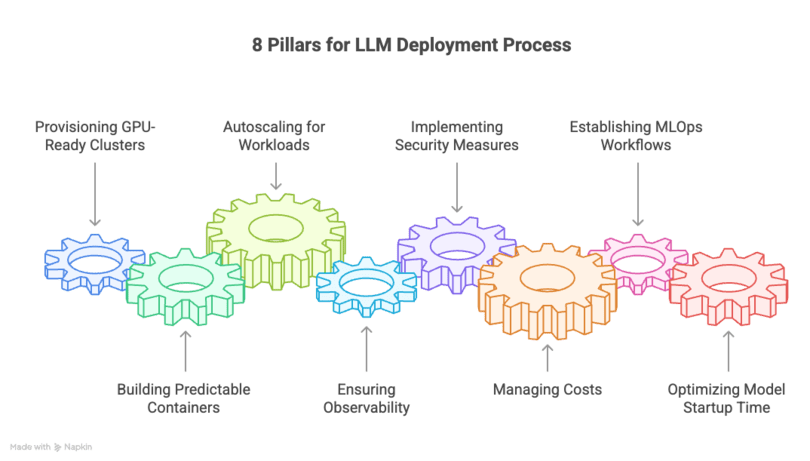

The 8 Pillars of the LLM Deployment Process

1. Provisioning GPU-Ready Clusters

LLMs deployed for real-world use demand GPUs. Teams are leaning into high-performance options like NVIDIA A100s and H100s for their speed and Tensor Core support. But the hard part isn’t getting access to a GPU; it’s getting everything else right: drivers, container runtimes, device plugins, node labels, taints, and machine types. Miss a step, and your pods sit in “Pending,” waiting endlessly for compute.

Some teams now use hybrid setups, with spot instances for everyday loads and reserved nodes for critical traffic. Others partition GPUs with NVIDIA Multi-Instance GPU (MIG) to run smaller models side by side. Taints on GPU nodes, paired with tolerations and node affinity on GPU pods, keep CPU-only workloads off GPU nodes and prevent mis-scheduling. Whatever your setup, GPU provisioning shouldn’t be painful, and a solid setup starts with automation: define GPU clusters as code to avoid configuration drift.
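The taint-and-affinity pattern can be sketched as a Pod manifest. This is a minimal sketch built as a Python dict, so it can be dumped to JSON, which `kubectl apply -f` accepts. It assumes your GPU nodes carry the taint `nvidia.com/gpu=present:NoSchedule` and the label `nvidia.com/gpu.present=true` (the label NVIDIA's GPU Feature Discovery sets). Both names depend on how your cluster was provisioned, so adjust them to match. `nvidia.com/gpu` is the extended resource advertised by the NVIDIA device plugin.

```python
import json


def gpu_pod_spec(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Pod manifest that can only land on tainted, labeled GPU nodes."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Tolerate the (assumed) taint that keeps ordinary pods off GPU nodes.
            "tolerations": [
                {"key": "nvidia.com/gpu", "operator": "Exists", "effect": "NoSchedule"}
            ],
            # Require the (assumed) label that marks nodes with GPUs attached.
            "affinity": {
                "nodeAffinity": {
                    "requiredDuringSchedulingIgnoredDuringExecution": {
                        "nodeSelectorTerms": [{
                            "matchExpressions": [{
                                "key": "nvidia.com/gpu.present",
                                "operator": "In",
                                "values": ["true"],
                            }]
                        }]
                    }
                }
            },
            "containers": [{
                "name": name,
                "image": image,
                # Extended resource advertised by the NVIDIA device plugin.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical image name; pipe the output to `kubectl apply -f -`.
    print(json.dumps(gpu_pod_spec("llm-server", "registry.example.com/llm:1.0"), indent=2))
```

You would apply the matching taint with `kubectl taint nodes <node-name> nvidia.com/gpu=present:NoSchedule`. The toleration only *permits* scheduling on GPU nodes. The affinity rule and the GPU limit are what actually steer the pod there, while the taint keeps CPU-only pods away.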

To simplify this whole process, Civo AI lets you request a GPU pool with a pre-installed NVIDIA device plugin in under two minutes. No custom DaemonSets. No vendor-specific YAML mysteries. Just pick your model size and go.

2. Building Predictable and Reproducible Containers

Building lean, reproducible containers is one of the easiest ways to avoid surprises in production. If your LLM image pulls nightly PyTorch builds or relies on whatever CUDA version happens to be installed, a silent failure is just a matter of time, and bloated images slow down cold starts and burn storage. Modern teams are getting strict instead: pinning base images, CUDA versions, model weights, and even specific Hugging Face commits. Image security scanning tools like Trivy help surface vulnerabilities during CI. Order layers from least to most frequently changed: install dependencies first, then copy model files, then code, so a code change doesn't force a rebuild of the large weight layer. With a good layer strategy and smart caching, builds get faster, images stay small, and behavior stays predictable.

Here is a simple example Dockerfile that puts these ideas together:

```dockerfile
# Pin the base image to a specific NGC release tag; the tag fixes the CUDA and PyTorch versions.
FROM nvcr.io/nvidia/pytorch:25.04-py3

WORKDIR /app

# Dependencies change rarely, so install them first to maximize layer caching.
COPY requirements.txt .
RUN python -m pip install --no-cache-dir --upgrade pip==24.0 setuptools==70.0 \
    && pip install --no-cache-dir -r requirements.txt

# Model weights are large and change less often than code, so copy them before the source.
COPY model/ ./model
COPY src/ ./src

CMD ["python", "src/serve.py"]
```
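Pinning only helps if nothing slips through. As a sketch, a small CI check like the one below could flag any line in `requirements.txt` that isn't locked to a single exact version. The function name and its rules are our own assumptions, not a standard tool. It accepts exact `==` pins only, rejects wildcards and ranges, and also flags direct URL references. Lock-file tools such as `pip-compile` (from pip-tools) cover the same ground more thoroughly.

```python
def unpinned_requirements(text: str) -> list:
    """Return requirement lines that are not pinned to one exact version."""
    offenders = []
    for raw in text.splitlines():
        # Simplification: treat everything after '#' as a comment.
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # skip blank lines and pip options such as --index-url or -r
        spec = line.split(";", 1)[0]  # ignore environment markers like "; python_version >= '3.10'"
        # Pinned means an exact '==' specifier with no wildcard and no extra range clause.
        if "==" not in spec or "*" in spec or "," in spec:
            offenders.append(line)
    return offenders
```

In CI, you would read `requirements.txt`, call the function, and fail the build if the returned list is non-empty.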

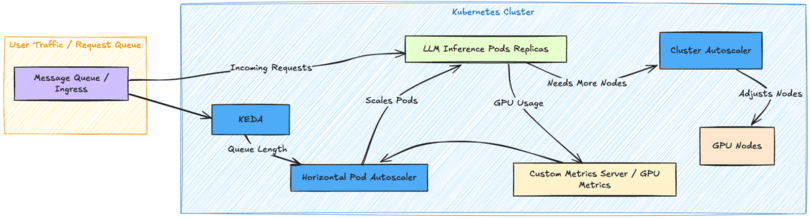

3. Autoscaling for Spiky Workloads

LLM workloads rarely behave predictably. You might see no traffic for hours, then a sudden flood of requests. Fixed replica counts and static clusters either waste resources or buckle under pressure. This is where smart autoscaling steps in. Most teams rely on the Horizontal Pod Autoscaler (HPA). Out of the box it scales on CPU or memory. With a custom metrics pipeline, such as NVIDIA's DCGM exporter feeding Prometheus and the Prometheus Adapter, it can scale on GPU utilization or request-queue length instead. Many teams prefer queue-based scaling. GPU utilization swings sharply with each inference batch, which makes it a noisy signal, while queue length tracks the backlog users actually wait on.

Meanwhile, the Cluster Autoscaler adjusts node counts behind the scenes, bringing in GPU nodes only when pending pods need them. For longer-running services, the Vertical Pod Autoscaler (VPA) helps fine-tune CPU and memory requests based on actual usage history. Civo AI streamlines this with built-in HPA and autoscaler settings tailored for GPU workloads.
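Kubernetes documents the HPA's core calculation as `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)`. The controller skips a change when that ratio is within a tolerance of 1.0, which is 0.1 by default. The sketch below applies the formula to a per-pod queue-length metric. That metric is hypothetical: imagine something like `inference_queue_length` exposed through a custom metrics adapter. The real controller also adds stabilization windows and scaling policies on top, so treat this as a way to reason about targets rather than a reimplementation.

```python
import math


def desired_replicas(current_replicas: int, current_avg_queue: float, target_avg_queue: float,
                     min_replicas: int = 1, max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Apply the HPA scaling formula to an average per-pod queue length."""
    ratio = current_avg_queue / target_avg_queue
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replicas unchanged
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the HPA's configured minReplicas/maxReplicas bounds.
    return max(min_replicas, min(max_replicas, desired))
```

Consider a target of 4 queued requests per pod. If 2 replicas are averaging 12 queued requests, they would scale to 6. If 6 replicas are averaging 1, they would scale down to 2. This shows why the target value, not just the metric, shapes how aggressively the deployment reacts to a burst.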

4. Observability from End to End

Running LLMs in production without observability is like driving at night with your headlights off. If GPU memory maxes out or inference latency spikes, you won’t know until users start complaining. That’s why teams now treat visibility as a first-class requirement.

In 2025, observability means tracking GPU metrics, pod health, and cold starts in real time. Logs need correlation IDs to follow requests across services, and tracing tools help pinpoint slowdowns in preprocessing, inference, or postprocessing. Some teams go further, logging every prompt and response to catch quality drift before it hits users. If you can’t answer “what’s wrong with the model right now?” in 30 seconds, you’re not ready for scale.
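Correlation IDs are easy to bolt onto Python's standard `logging` module. This minimal sketch stores the current request's ID in a `contextvars.ContextVar`, which also keeps it separate across threads and `asyncio` tasks. A logging filter then stamps the ID onto every record. The logger name and the idea of receiving the ID from an upstream `X-Request-ID` header are assumptions. In a real service, you would also forward the ID to downstream calls and attach it to tracing spans.

```python
import contextvars
import io
import logging
import uuid
from typing import Optional

# Holds the correlation ID for whichever request the current thread or task is serving.
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Stamp the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


def make_logger(stream) -> logging.Logger:
    logger = logging.getLogger("llm-service")  # hypothetical service name
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.addFilter(RequestIdFilter())
    handler.setFormatter(logging.Formatter("%(request_id)s %(levelname)s %(message)s"))
    logger.handlers = [handler]  # replace any previously attached handlers
    logger.propagate = False
    return logger


def handle_request(logger: logging.Logger, incoming_id: Optional[str] = None) -> str:
    # Reuse the upstream ID (e.g. from an X-Request-ID header) or mint a new one.
    rid = incoming_id or uuid.uuid4().hex
    token = request_id_var.set(rid)
    try:
        logger.info("preprocess done")
        logger.info("inference done")
        return rid
    finally:
        request_id_var.reset(token)  # don't leak the ID into the next request


if __name__ == "__main__":
    buf = io.StringIO()
    handle_request(make_logger(buf), "abc123")
    print(buf.getvalue(), end="")
```

Every line logged while serving a request now starts with the same ID. This lets you grep or query one request's path through preprocessing, inference, and postprocessing.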

Civo AI offers one-click Prometheus, Grafana, and Kubernetes dashboards from the Kubernetes Marketplace, giving you alerts and metrics without extra setup.

5. Security and Compliance

LLM services often process sensitive data, from personal info to proprietary documents. If an endpoint is misconfigured, even a single token completion could leak something critical. That’s why securing inference workloads is non-negotiable.

Teams today isolate network traffic with Kubernetes NetworkPolicies and encrypt traffic with TLS, using mutual TLS between services. Service-to-service calls, especially gRPC, must never travel in plaintext. Secrets like API keys or database credentials are managed through HashiCorp Vault or through Kubernetes Secrets with encryption at rest enabled, since Secrets are only base64-encoded by default. Some teams even run LLM workloads inside gVisor or Kata Containers for extra runtime isolation.
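Here is a sketch of a NetworkPolicy that admits traffic to inference pods only from a gateway. The policy selects the inference pods and lists `Ingress` in `policyTypes`, so any ingress not matched by its rule is denied. The labels (`app: llm-inference`, `app: api-gateway`), the namespace, and port 8000 are hypothetical. Enforcement also requires a CNI plugin that supports NetworkPolicy.

```python
import json


def inference_network_policy(namespace: str, app_label: str, gateway_label: str, port: int) -> dict:
    """Allow ingress to the inference pods only from gateway pods, on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{app_label}-allow-gateway", "namespace": namespace},
        "spec": {
            # The pods this policy protects.
            "podSelector": {"matchLabels": {"app": app_label}},
            # Once pods are selected for Ingress, anything not allowed below is denied.
            "policyTypes": ["Ingress"],
            "ingress": [{
                # A bare podSelector matches pods in the policy's own namespace.
                "from": [{"podSelector": {"matchLabels": {"app": gateway_label}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    # All names and the port are hypothetical placeholders.
    print(json.dumps(inference_network_policy("llm", "llm-inference", "api-gateway", 8000), indent=2))
```

If the gateway lives in a different namespace, the `from` entry would also need a `namespaceSelector`.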

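Audit logs and strict RBAC help track access and prevent privilege creep. Policy engines such as OPA Gatekeeper or Kyverno enforce policy-as-code guardrails at admission time. They automatically reject workloads that break the rules, such as privileged containers, images from unapproved registries, or Ingresses without TLS.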
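Policy engines express their rules in their own formats: Rego for OPA, and YAML policies for Kyverno. To show the kind of check such a rule encodes, here is a plain-Python sketch of an admission-style validation. It is not either tool's API. The three rules and the approved-registry prefix are illustrative assumptions.

```python
def policy_violations(pod: dict, approved_registries: tuple) -> list:
    """Return reasons a Pod manifest should be rejected; an empty list means admit."""
    violations = []
    spec = pod.get("spec", {})
    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        # Rule 1: no privileged containers.
        if container.get("securityContext", {}).get("privileged", False):
            violations.append(f"{name}: privileged containers are not allowed")
        # Rule 2: images must start with an approved registry prefix.
        if not container.get("image", "").startswith(approved_registries):
            violations.append(f"{name}: image must come from an approved registry")
    # Rule 3 (simplified: checks the pod-level securityContext only): run as non-root.
    if not spec.get("securityContext", {}).get("runAsNonRoot", False):
        violations.append("pod: securityContext.runAsNonRoot must be true")
    return violations
```

A real policy engine runs checks like these on every create or update request and rejects the object before it ever reaches the scheduler.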

6. Cost Predictability

Serving LLMs at scale isn’t cheap. Runaway autoscaling or idle GPU clusters can quickly rack up costs if you’re not careful. A large model with tight latency requirements burns through GPU hours fast, and without clear visibility, you’ll only find out when the invoice arrives.

Smart teams in 2025 put guardrails in place early. That means tracking GPU usage by namespace or model version, setting spending alerts, and enforcing resource quotas to avoid one team hoarding the cluster. Spot instances are great for non-critical tasks, while on-demand is reserved for production paths. Many also schedule automatic shutdowns of dev clusters after hours to cut waste.
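Attributing GPU spend starts with simple arithmetic: GPUs × hours × hourly rate, summed per namespace and compared against a budget. The sketch below does exactly that on hypothetical usage records. The rate, namespaces, and budgets are made-up numbers; in practice, the usage data would come from your metrics stack or billing export. The sketch also builds a `ResourceQuota` that caps GPU requests in a namespace, using the `requests.nvidia.com/gpu` quota key that Kubernetes supports for extended resources.

```python
from collections import defaultdict


def gpu_cost_by_namespace(usage, hourly_rate, budgets):
    """Sum GPU cost per namespace and list the namespaces over budget.

    usage: iterable of (namespace, gpu_count, hours) records.
    budgets: mapping of namespace -> spending limit; namespaces without one are never flagged.
    """
    costs = defaultdict(float)
    for namespace, gpus, hours in usage:
        costs[namespace] += gpus * hours * hourly_rate
    over_budget = sorted(ns for ns, cost in costs.items() if cost > budgets.get(ns, float("inf")))
    return dict(costs), over_budget


def gpu_quota(namespace, max_gpus):
    """ResourceQuota capping the total GPUs that pods in a namespace may request."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "gpu-quota", "namespace": namespace},
        "spec": {"hard": {"requests.nvidia.com/gpu": str(max_gpus)}},
    }
```

Take a hypothetical rate of 2.50 per GPU-hour. In `chatbot`, 2 GPUs for 10 hours plus 1 GPU for 4 hours comes to 60.00, which would trip a 50.00 budget and could feed a spending alert.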

Civo AI helps by offering transparent billing insights and one-click spot instance pools, so you can scale your LLMs without blowing the budget.

7. MLOps Workflows

Shipping LLM updates without a proper pipeline is a recipe for chaos: manual scripts, lost experiments, and no traceability. In 2025, mature teams treat training like software. They version everything (code, data, hyperparameters), automate with orchestration tools like Argo Workflows or Kubeflow, and promote changes through Git.

Modern LLM pipelines ingest fresh data, retrain, evaluate, and package models automatically, rolling them out only if they meet accuracy or fairness thresholds. Canary testing and rollback are standard, especially when fine-tuning on user feedback or logs. This isn’t a “train once, deploy forever” world. You need automation to monitor for drift and update safely.
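The "roll out only if it meets thresholds" step can be expressed as a small gate in the pipeline. In this sketch, a candidate must clear an absolute accuracy floor and stay under a fairness-gap ceiling. It also must not regress by more than a set margin against the current production model. A separate check rolls back a canary whose error rate rises too far above the stable version's. Every threshold and the `EvalReport` fields are hypothetical placeholders for whatever your evaluation suite actually measures.

```python
from dataclasses import dataclass


@dataclass
class EvalReport:
    accuracy: float      # task accuracy on a held-out evaluation set
    fairness_gap: float  # largest accuracy difference between user groups (lower is better)


def should_promote(candidate, baseline, min_accuracy=0.85, max_fairness_gap=0.05, max_regression=0.01):
    """Return (promote?, reasons) for a candidate model versus the production baseline."""
    reasons = []
    if candidate.accuracy < min_accuracy:
        reasons.append(f"accuracy {candidate.accuracy:.3f} below floor {min_accuracy:.3f}")
    if candidate.fairness_gap > max_fairness_gap:
        reasons.append(f"fairness gap {candidate.fairness_gap:.3f} above ceiling {max_fairness_gap:.3f}")
    if candidate.accuracy < baseline.accuracy - max_regression:
        reasons.append("accuracy regressed versus baseline")
    return (len(reasons) == 0, reasons)


def canary_action(canary_error_rate, stable_error_rate, max_increase=0.02):
    """Roll back if the canary's error rate exceeds the stable version's by more than max_increase."""
    return "rollback" if canary_error_rate > stable_error_rate + max_increase else "continue"
```

The gate returns its reasons as well as a verdict. This means a blocked promotion leaves a readable record in the pipeline logs, which helps with the traceability this section calls for.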

Civo AI supports GitOps workflows with managed Kubeflow and native Argo CD integrations, so you can manage pipelines declaratively, test changes in dev, and promote to prod with confidence.

8. Model Startup Time

Cold starts kill the user experience: no one wants to wait 12 seconds for a chatbot to wake up. Inference latency adds up fast, especially under bursty loads. In 2025, smart teams combat this with a mix of model optimization and warm-start tactics.

Quantized models, TensorRT engines, and pruned weights shrink memory and speed up inference. Instead of loading PyTorch checkpoints at runtime, pods mount prebuilt models from fast SSDs or use ONNX/TensorRT graphs bundled with the container.
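A quick back-of-the-envelope calculation shows why quantization matters for startup. Weight memory is roughly parameter count × bytes per parameter, and load time is roughly that size divided by storage read throughput. The sketch below encodes both. It ignores the KV cache, activations, quantization scale factors, and runtime overhead. The 2 GB/s read rate in the example is an assumed figure, not a benchmark.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate size of the model weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def load_time_s(size_gb: float, read_gb_per_s: float) -> float:
    """Lower bound on load time when reading from storage is the bottleneck."""
    return size_gb / read_gb_per_s
```

For a 7-billion-parameter model, that works out to about 14 GB in FP16, 7 GB in INT8, and 3.5 GB in INT4. At an assumed 2 GB/s, loading drops from roughly 7 seconds to under 2.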

Some keep a warm pool of idle pods running dummy inferences to stay hot. Others use ReadWriteMany PVCs or shared memory caches like Redis for weight preloading.
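Warm pods only help if they receive traffic *after* they are warm. A common pattern has three steps: load the weights once, run a few dummy inferences, and only then report ready. A Kubernetes readiness probe then keeps the pod out of the Service until warm-up finishes. In this sketch, `load_fn` and `infer_fn` are hypothetical placeholders for your real model loader and inference call. Wiring `is_ready()` to an HTTP readiness endpoint is left to your serving framework.

```python
import threading


class WarmModelServer:
    """Loads the model once, runs warm-up inferences, and only then reports ready."""

    def __init__(self, load_fn, infer_fn, warmup_prompts):
        self._load_fn = load_fn
        self._infer_fn = infer_fn
        self._warmup_prompts = list(warmup_prompts)
        self._model = None
        self._ready = threading.Event()

    def start(self) -> None:
        self._model = self._load_fn()
        # Dummy inferences absorb one-time costs (e.g. CUDA context setup or JIT
        # compilation, depending on the runtime) before real users arrive.
        for prompt in self._warmup_prompts:
            self._infer_fn(self._model, prompt)
        self._ready.set()

    def start_in_background(self) -> threading.Thread:
        # Lets the HTTP server come up (and answer "not ready") while warm-up runs.
        thread = threading.Thread(target=self.start, daemon=True)
        thread.start()
        return thread

    def is_ready(self) -> bool:
        # Back the readiness probe with this so traffic only arrives after warm-up.
        return self._ready.is_set()

    def generate(self, prompt):
        if not self._ready.is_set():
            raise RuntimeError("model is still warming up")
        return self._infer_fn(self._model, prompt)
```

Keeping liveness and readiness separate matters here. A slow warm-up should delay traffic, not cause Kubernetes to restart the pod.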

In practice, teams tackle cold starts with:

- Compiled models, not raw checkpoints

- Pre-mounted volumes with preloaded weights

- Warm pods behind low-RPS proxies

- Prebuilt images for speed

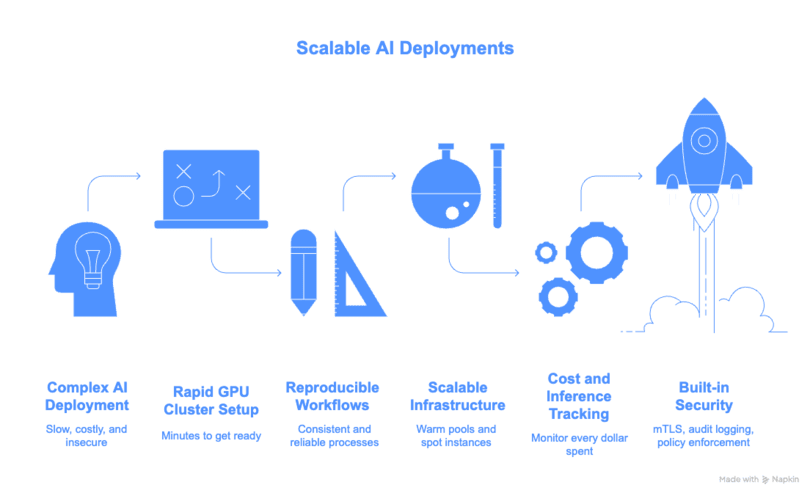

Getting started with Civo AI

Civo AI isn’t just another place to run your LLMs. It’s your deployment co-pilot that helps you get a GPU cluster ready in minutes, build reproducible workflows, scale with warm pools and spot instances, and track every dollar and inference from day one.

Whether you're fine-tuning Mistral for customer support, running open-weight models on domain data, or building agents with RAG, Civo AI gives you the control, observability, and reliability you need to take it to production.

Security and cost management are built in. From mTLS and audit logging to policy enforcement and secrets handling, everything is covered out of the box.

You don’t need to be an infra expert. You just need a platform that gets out of the way and lets you ship faster.

No more cold start headaches, surprise invoices, or endless cluster tuning. With Civo AI, you move from trial-and-error to stable, scalable deployments that just work.

Summary

Deploying LLMs on Kubernetes in 2025 is more than just spinning up containers. It means making smart choices around GPU hardware, ensuring containers are reproducible, scaling cleanly with bursts in traffic, stitching logs and metrics together for clear visibility, securing endpoints, managing costs, automating the entire training-to-deployment cycle, and cutting startup delays wherever possible.

Engineering teams are moving beyond duct-tape DevOps toward infrastructure that is reproducible, observable, secure, and financially sustainable. These are no longer nice-to-haves. They’re the foundation for reliable, production-grade LLM services.

LLM infrastructure should empower innovation, not slow it down. By adopting these practices, and leveraging a platform like Civo AI that brings them together under one roof, teams can deliver fast, reliable, and cost-effective LLM services without reinventing the wheel. Your models get the resources they need, exactly when they need them, so you can focus on what matters most: building the next generation of AI experiences. If you're still debugging cold starts on the weekend, maybe it’s time for a better way.