If you are exploring the cloud-native world, the chances of coming across the term “Kubernetes” are high. Kubernetes, also known as K8s, is an open-source container orchestrator. It has quickly become a lifeline for managing containerized applications through automated deployments, and cloud-native developers commonly use it as the main API for building and deploying reliable, scalable distributed systems.

Throughout this article, we will explore what K3s is, including its architecture, setup, and uses. We will also outline how you can get started using K3s and launch your first cluster in under 90 seconds.

What is K3s?

Over the years, Kubernetes has been developed to give users a quicker, simpler, and more cost-effective way to launch clusters. In 2019, Rancher Labs (now part of SUSE) launched K3s to create a streamlined version of Kubernetes that did not have the same time constraints when launching a cluster. By removing sections of the Kubernetes source code that were not typically required, Rancher Labs was able to create a single binary that is a fully functional distribution of Kubernetes.

Since being introduced, K3s has become a fully CNCF (Cloud Native Computing Foundation) certified Kubernetes offering, meaning that YAML you write for K8s will also apply to a K3s cluster. K3s is a fully compatible, CNCF-conformant Kubernetes distribution, packaged into a single binary that includes everything you need to run Kubernetes. Through Civo Academy, you can learn more about the background to K3s in our “Kubernetes Introduction” module.
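To make the compatibility point concrete, here is a minimal sketch that builds a standard Deployment manifest in Python. The function name `deployment_manifest`, the app name `hello-web`, and the `nginx:stable` image are placeholders chosen for illustration. The sketch emits JSON rather than YAML, which `kubectl apply -f` also accepts, so that it needs nothing beyond the standard library.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 1) -> dict:
    """Build a plain apps/v1 Deployment; nothing here is specific to K3s."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical example values; swap in your own name and image.
manifest = deployment_manifest("hello-web", "nginx:stable", replicas=2)
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and applying it should behave the same way whether the target is a K3s cluster or any other conformant Kubernetes cluster.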

What does K3s stand for?

‘K8s’ represents Kubernetes, a 10-letter word with 8 letters between the ‘K’ and the ‘s’. As K3s is the simplified version of K8s, Rancher Labs designed the name to be ‘half as big’: a 5-letter word with 3 letters between the ‘K’ and the ‘s’.
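The naming rule is simple enough to express in a few lines. The sketch below is only an illustration: `numeronym` is a name chosen here, and `Kxxxs` is a placeholder standing in for any five-letter word, since K3s is not an abbreviation of a real word.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as first letter + number of inner letters + last letter."""
    if len(word) < 3:
        return word  # too short to have any inner letters worth counting
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))  # K8s
print(numeronym("Kxxxs"))       # K3s (placeholder five-letter word)
```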

Is K3s the same as Kubernetes?

Both K3s and Kubernetes can be classified as container orchestration tools, and they share most of the same features. K3s adds some features that make it more efficient to run than upstream Kubernetes, such as automatic deployment of manifests, single-node or multi-node installations, and support for SQL datastores such as SQLite, MySQL, and PostgreSQL in place of etcd.

If you are interested in understanding how K3s differs from K8s, check out our “K3s vs K8s” article, which delves into these differences.

However, if you're looking for a general overview of Kubernetes, consider reading through our comprehensive guide to Kubernetes.

Who is able to use K3s?

Due to its small size and high availability, K3s is ideal for creating production-grade Kubernetes clusters in resource-constrained environments and remote locations. This makes it a perfect fit for edge computing and IoT devices like the Raspberry Pi. Furthermore, Continuous Integration (CI) automation processes favor K3s due to its lightweight nature and swift, straightforward deployment.

How does K3s work?

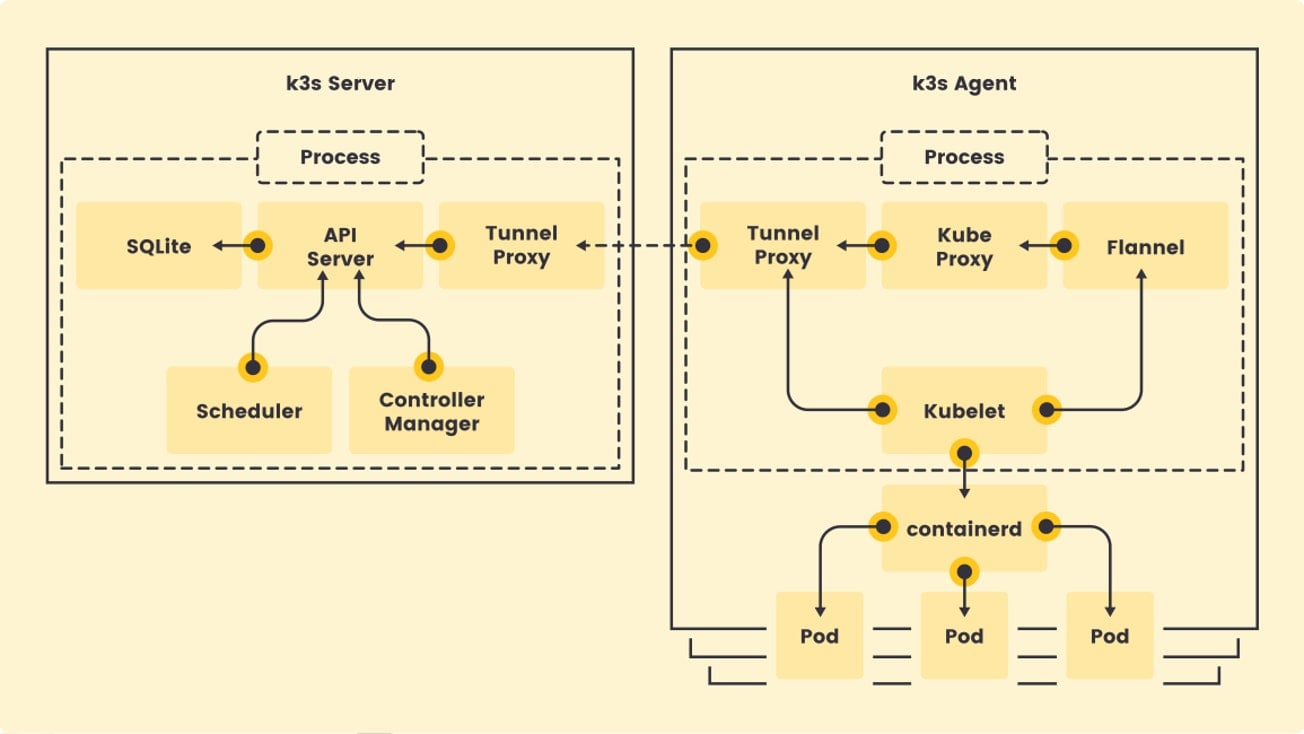

The foundation of K3s is rooted in simplicity, so that developers can get fully-fledged Kubernetes clusters running in a short amount of time. Like K8s, it operates using an API server, scheduler, and controller manager. However, in K3s, Kine stands in for etcd as the datastore layer, with SQLite as the default database behind it. Other additions in K3s include a tunnel proxy and Flannel. All of the components in K3s run together as a single process, making it lightweight. This contrasts with K8s, where each component runs as a separate process. This change allows K3s to create a single-node cluster in mere seconds, as both the server and the agent can run as a single process on a single node.
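To give a feel for what keeping cluster state in SQLite rather than etcd can look like, here is a toy sketch of a revisioned key-value store. It is an illustration only: the `TinyStore` class, its table layout, and the example key are assumptions made for this sketch and are not Kine's actual schema or API.

```python
import sqlite3
from typing import Optional


class TinyStore:
    """Toy revisioned key-value store on SQLite (NOT Kine's real schema)."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS kv ("
            "revision INTEGER PRIMARY KEY AUTOINCREMENT, "
            "key TEXT NOT NULL, value TEXT NOT NULL)"
        )

    def put(self, key: str, value: str) -> int:
        """Append a new revision for key and return its revision number."""
        with self.db:  # commits on success, rolls back on error
            cur = self.db.execute(
                "INSERT INTO kv (key, value) VALUES (?, ?)", (key, value)
            )
        return cur.lastrowid

    def get(self, key: str) -> Optional[str]:
        """Return the value stored by the latest revision of key, if any."""
        row = self.db.execute(
            "SELECT value FROM kv WHERE key = ? ORDER BY revision DESC LIMIT 1",
            (key,),
        ).fetchone()
        return row[0] if row else None


store = TinyStore()
store.put("/registry/pods/default/web", "v1")  # example key, chosen for illustration
store.put("/registry/pods/default/web", "v2")
print(store.get("/registry/pods/default/web"))  # v2
```

Every write appends a row instead of overwriting one, which is the general idea behind serving a revision-based API from an ordinary SQL table.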

What is the K3s architecture?

K3s was designed around two distinct processes: the server and the agent. The K3s server runs the basic Kubernetes control-plane components (API server, controller manager, and scheduler) along with SQLite and the reverse tunnel proxy. The K3s agent, on the other hand, handles the kubelet, kube-proxy, container runtime, load balancers, and other additional components.
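The split between the two processes can be summarised as a small lookup table. The sketch below simply restates the description above in Python; the short component names and the `components_for` helper are informal labels chosen for this example, not identifiers used by K3s.

```python
from typing import Iterable, List

# Component lists mirror the description above; the short names are informal
# labels chosen for this sketch, not identifiers used by K3s itself.
K3S_PROCESSES = {
    "server": ["api-server", "controller", "scheduler", "sqlite", "tunnel-proxy"],
    "agent": ["kubelet", "kube-proxy", "container-runtime", "load-balancer"],
}


def components_for(roles: Iterable[str]) -> List[str]:
    """List the components a node runs, given the K3s processes it hosts."""
    components: List[str] = []
    for role in roles:
        if role not in K3S_PROCESSES:
            raise ValueError(f"unknown K3s process: {role!r}")
        components.extend(K3S_PROCESSES[role])
    return components


# A single-node cluster hosts both processes on one machine.
print(components_for(["server", "agent"]))
# A worker node only runs the agent.
print(components_for(["agent"]))
```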

Diagram by Rancher in their Introduction to k3s blog

What are the benefits of K3s?

When it was created in 2019, K3s was designed to be the lightweight version of Kubernetes, allowing users to launch a cluster seamlessly without carrying source code they do not use. It was built around three main qualities: being lightweight, being highly available, and supporting automation:

| Feature | Description |
|---|---|
| Lightweight | K3s, designed as a single binary of less than 40MB, effectively implements the Kubernetes API. Its minimal resource requirements allow for running a cluster on machines with as little as 512MB of RAM. These properties typically enable the deployment of clusters with a few nodes in under two minutes. |
| Highly Available | Edge and IoT platforms have increasingly transformed the way containers are operated and managed. Given their limited resources, traditional Kubernetes can seem too bulky for these platforms. Rancher designed K3s to be lightweight, which simplifies installation and distribution across edge and IoT environments by eliminating superfluous lines of code. |
| Automation | Due to its speed and lightness, K3s greatly facilitates CI automation. Continuous Integration aids in reproducing production components and infrastructure on a smaller scale. CI automation greatly benefits from the rapid, lightweight, and straightforward characteristics exemplified by K3s. It can be installed in mere seconds with a single command and is effortlessly manageable as part of the CI automation process. |

How to get started using K3s?

Unlike deploying a cluster with k8s, which can take up to 10 minutes, you can start using K3s and have a working cluster deployed in under 90 seconds. To help you get started with K3s, check out our docs.
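Once a cluster is up, a common first check is whether its nodes report `Ready`. The sketch below assumes you have captured the output of `kubectl get nodes -o json` as a string; the `ready_nodes` helper and the sample node names are hypothetical and only serve to illustrate the check.

```python
import json
from typing import List


def ready_nodes(nodes_json: str) -> List[str]:
    """Return the names of nodes whose Ready condition has status "True"."""
    ready = []
    for node in json.loads(nodes_json).get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True"
               for c in conditions):
            ready.append(node["metadata"]["name"])
    return ready


# Trimmed-down sample in the shape of `kubectl get nodes -o json` output.
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "node-a"},
         "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
        {"metadata": {"name": "node-b"},
         "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
    ]
})
print(ready_nodes(SAMPLE))  # ['node-a']
```

In a script, the same function could be fed the standard output of a `kubectl get nodes -o json` call and polled until every expected node appears in the result.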

Use cases and scenarios

Experimentation and learning

Keep hearing about Kubernetes, or about a particular application like Linkerd? Want to have a quick play with a fully-functional cluster with the application set up alongside it? Civo Kubernetes and our application marketplace allow you to do just that: you can launch a cluster with any number of applications within minutes, shortening the time to the fun stuff.

Or, are you completely new to Kubernetes, and don't want to spend time doing things The Hard Way, at least not yet? Spin up a cluster of your choice, keeping it bare-bones for understanding the inner workings of Kubernetes components, or watch your cluster with a tool like K9s while you deploy applications from the marketplace to see the changes they make, all within the time it takes you to eat a sandwich at lunch.

CI/CD pipelines

A useful real-world application of a K3s cluster is in the field of continuous integration / continuous delivery. Whether you want to build a Continuous Deployment (CD) pipeline using Argo to redeploy an application whenever a build passes tests or integrate Kubernetes into a project on GitLab, our Kubernetes offering is ideal.

Resilient hosting

If you just want to run a blog in a Kubernetes environment and make sure that all traffic to your domains is secured with a wildcard certificate, our beta testing community has graciously contributed their knowledge and experience to let you do just that.

Application authoring

As K3s is fully Kubernetes compatible, we even have a guide to Helm 3 and using it to build a chart to deploy an Express.js application. Best of all, you can be up and running with any of the above within minutes, and the knowledge is transferable to other Kubernetes distributions.

Beyond the development environment

Outside the developer space, Rancher has detailed industrial use cases for K3s, illustrating its capability to run in production-critical environments, such as monitoring thousands of sensors on an oil rig.

The advantage of using a managed Kubernetes service is that it takes the headache out of server configuration. You can concentrate on application development and rapid prototyping without having to worry about the underlying infrastructure or how it’s run. You can just get a working endpoint to the API with a public IP address within 120 seconds, along with pre-configured applications of your choice.

Our managed Kubernetes service is perfect for rapid prototyping, CI/CD runs and other developer scenarios where speed and performance (and cost) are critically important.

Next steps

If you are interested in hearing more about the future of K3s, our meetup with Kunal Kushwaha, Kai Hoffman, Dinesh Majrekar, and David Fogle dives deeper into this topic and answers your burning questions.

To get started learning more about K3s, why not join our K3s-powered Kubernetes service and have your first cluster deployed in under 90 seconds?

Additional resources

- Explore all of Civo's K3s tutorials for in-depth knowledge and hands-on experience with this lightweight Kubernetes distribution.

- Enroll in Civo Academy to learn everything you need to get started with Kubernetes, from the basics to more advanced concepts.

- Compare K3s vs K8s to understand their differences, strengths, and weaknesses, and decide which one better suits your needs.

- Evaluate K3s vs Talos, a modern OS designed to be secure, immutable, and minimal, to see which offers the best solution for your Kubernetes environment.