Kubernetes has become a household name in container orchestration as organizations look to streamline complex deployment processes. Thanks to its rapidly growing popularity and broad ecosystem, many organizations now use it to manage their applications and workloads. But what exactly is it, and how did it come into existence?

Kubernetes has transformed modern software development and deployment. Its ability to automate application deployment and scale applications with ease has helped it win a major share of the containerized application market. In this blog, we will learn about Kubernetes, including the components of its architecture, how to deploy an application using Kubernetes, and advanced topics such as networking, security, operators, and storage.

What is Kubernetes?

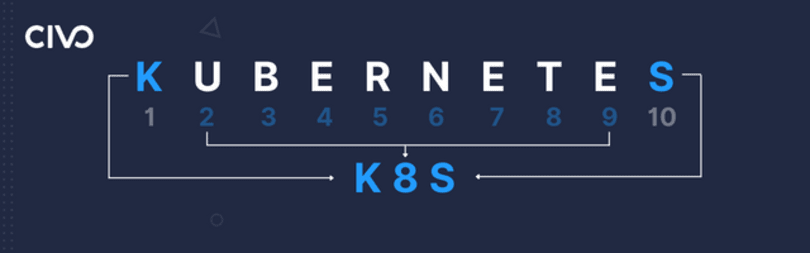

Kubernetes (also known as K8s) is an open source container orchestration tool through which you can automatically scale, deploy, and manage your containerized applications.

It offers load balancing and provides observability features for monitoring the health of a deployed cluster. In Kubernetes, you define the desired state of an application, and the infrastructure required to run it, through a declarative API. Kubernetes also offers self-healing: if a container fails or stops working, Kubernetes restarts or replaces it to bring the application back to its desired state and restore normal operation.
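The declarative, self-healing behavior described above comes from control loops that repeatedly compare the declared desired state with the observed state and act on the difference. The following Python sketch is a toy model of that idea, not Kubernetes source code; the `Pod` and `ReplicaController` classes and the action strings are hypothetical names chosen for illustration. In a real cluster, the kubelet restarts failed containers and controllers such as the ReplicaSet controller replace missing pods.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    healthy: bool = True


@dataclass
class ReplicaController:
    """Hypothetical toy controller that keeps `desired_replicas` healthy pods running."""
    app: str
    desired_replicas: int
    pods: list[Pod] = field(default_factory=list)
    _next_id: int = 0

    def _new_pod(self) -> Pod:
        self._next_id += 1
        return Pod(name=f"{self.app}-{self._next_id}")

    def reconcile(self) -> list[str]:
        """Compare actual state with desired state and return the actions taken."""
        actions = []
        # Self-healing: remove pods that have failed so they can be replaced.
        for pod in [p for p in self.pods if not p.healthy]:
            self.pods.remove(pod)
            actions.append(f"deleted failed pod {pod.name}")
        # Converge the number of pods toward the declared replica count.
        while len(self.pods) < self.desired_replicas:
            pod = self._new_pod()
            self.pods.append(pod)
            actions.append(f"created pod {pod.name}")
        while len(self.pods) > self.desired_replicas:
            pod = self.pods.pop()
            actions.append(f"deleted surplus pod {pod.name}")
        return actions


if __name__ == "__main__":
    ctrl = ReplicaController(app="web", desired_replicas=3)
    print(ctrl.reconcile())
    ctrl.pods[0].healthy = False  # simulate a crashed pod
    print(ctrl.reconcile())
```

Calling `reconcile()` again after a simulated crash deletes the failed pod and creates a replacement, which is the self-healing behavior in miniature. Running the loop when nothing has changed produces no actions.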

Overview of the Kubernetes architecture

A Kubernetes cluster consists of a set of nodes, running on physical or virtual machines, that host applications in the form of containers. The architecture is divided into a control plane and a set of worker nodes, each running different processes. To understand the Kubernetes architecture, you need to know how the components of the control plane and the worker nodes connect and interact with each other.

Source: Kubernetes Components

Control plane components

The control plane is responsible for managing the Kubernetes cluster. It stores information about the cluster's nodes and workloads, and it schedules and monitors containers with the help of the following components:

| Component | Role |
|---|---|
| kube-apiserver | The front end of the control plane and the primary management component of Kubernetes. The other control plane components, the worker nodes, and external users all communicate with the cluster through it. It exposes the Kubernetes API that users and tools such as kubectl use to perform management operations. |
| Key-value store | A consistent, highly available key-value store (commonly etcd) that holds all cluster data, including information about every resource and object running in the cluster. |
| kube-scheduler | Watches for newly created pods that have not yet been assigned to a node and selects a suitable node for each one based on the pod's resource requirements. It also takes into account the available capacity of the worker nodes and placement constraints such as taints and tolerations and node affinity rules. |
| Controller manager | Runs a set of controllers that are compiled into a single binary and run in a single process to reduce complexity. Each controller (for example, the node controller or the job controller) watches the state of the cluster and works to move it toward the desired state. |
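To make the kube-scheduler's role more concrete, the following Python sketch shows a simplified filter-then-score placement decision. It is a toy under stated assumptions, not the real scheduler: it checks only free CPU and memory, treats a toleration as an exact key/value match for `NoSchedule` taints, uses a nodeSelector-style label match as a stand-in for node affinity, and scores nodes by how much CPU would remain. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Taint:
    key: str
    value: str
    effect: str  # only "NoSchedule" is modelled in this sketch


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int
    labels: dict[str, str] = field(default_factory=dict)
    taints: list[Taint] = field(default_factory=list)


@dataclass
class PodRequest:
    cpu_millicores: int
    memory_mib: int
    tolerations: list[tuple[str, str]] = field(default_factory=list)  # (key, value) pairs
    node_selector: dict[str, str] = field(default_factory=dict)


def is_feasible(pod: PodRequest, node: Node) -> bool:
    """Filter step: can this node run the pod at all?"""
    if pod.cpu_millicores > node.free_cpu_millicores:
        return False
    if pod.memory_mib > node.free_memory_mib:
        return False
    # A NoSchedule taint repels the pod unless the pod tolerates it.
    for taint in node.taints:
        if taint.effect == "NoSchedule" and (taint.key, taint.value) not in pod.tolerations:
            return False
    # Simplified stand-in for node affinity: every selector label must match.
    return all(node.labels.get(k) == v for k, v in pod.node_selector.items())


def schedule(pod: PodRequest, nodes: list[Node]) -> Optional[str]:
    """Return the chosen node name, or None if the pod must stay Pending."""
    candidates = [n for n in nodes if is_feasible(pod, n)]
    if not candidates:
        return None
    # Score step: prefer the node with the most CPU left after placement;
    # ties are broken alphabetically by node name.
    best = min(
        candidates,
        key=lambda n: (-(n.free_cpu_millicores - pod.cpu_millicores), n.name),
    )
    return best.name
```

With an untainted general-purpose node and a tainted GPU node, an ordinary pod lands on the untainted node, while a pod that tolerates the taint and selects the GPU label lands on the GPU node. A pod that fits nowhere gets `None`, mirroring a pod that stays Pending.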

Worker node components

Just like the control plane, the worker nodes also have components that help connect them with the control plane and report the status of the containers in them:

| Component | Role |
|---|---|
| kube-proxy | A network proxy that runs on each node in the cluster and implements part of the Kubernetes Service concept. It watches for new Services, and each time one is created, it sets up rules on the node that forward traffic sent to that Service to its backend pods. These rules allow pods to be reached from network sessions inside or outside the cluster. |
| kubelet | The agent that connects each worker node to the control plane. It runs on every node in the cluster, makes sure the containers described in each pod are running, and reports the status of the node and its pods to kube-apiserver. |
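The kube-proxy row above describes forwarding rules that are rewritten whenever Services change. The Python sketch below models those rules on a single node, assuming hypothetical names (`ProxyRules`, `on_service_change`, `on_service_delete`, `forward`) and example IP addresses. Picking a backend at random is roughly how the iptables proxy mode spreads connections; the real kube-proxy programs kernel rules rather than running code like this.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ProxyRules:
    """Toy model of the Service forwarding rules kube-proxy keeps on one node."""
    # Maps a Service's virtual "clusterIP:port" to its backend pod addresses.
    rules: dict[str, list[str]] = field(default_factory=dict)

    def on_service_change(self, cluster_ip: str, port: int, endpoints: list[str]) -> None:
        """Rewrite the rule whenever a Service or its set of ready pods changes."""
        self.rules[f"{cluster_ip}:{port}"] = list(endpoints)

    def on_service_delete(self, cluster_ip: str, port: int) -> None:
        """Drop the rule when the Service is removed."""
        self.rules.pop(f"{cluster_ip}:{port}", None)

    def forward(self, destination: str, rng: random.Random) -> str:
        """Pick the backend pod that a connection to `destination` is sent to."""
        backends = self.rules.get(destination)
        if not backends:
            # No rule or no ready backends: the connection cannot be forwarded.
            raise LookupError(f"no endpoints for {destination}")
        return rng.choice(backends)
```

Passing in a seeded `random.Random` keeps the sketch deterministic for testing; over many connections, traffic to the Service's virtual address spreads across all of its backend pods.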

The container runtime (for example, containerd or CRI-O) is the component responsible for running and managing containers. It must be installed on every worker node, and if you plan to run the control plane components as containers, you'll also need a container runtime on the control plane nodes.

Why you should use Kubernetes

Benefits of using Kubernetes

As a powerful container orchestration tool, Kubernetes offers several advantages. Some of them are listed below:

| Features | Benefits |
|---|---|
| Automation | Automates the scaling, deployment, and management of applications, streamlining tasks for developers and increasing efficiency |
| Availability | Provides high availability by running applications across multiple nodes and by self-healing, so applications keep operating even when a container fails |
| Ecosystem | Offers a large ecosystem of extensions, plugins, and tools for logging, monitoring, security, and troubleshooting that make application management and deployment more efficient |
| Load balancing | Automatically distributes traffic evenly across available replicas, preventing any single replica from being overloaded and keeping the application available even if one replica fails or is removed |
| Portability | Supports consistent deployment across on-premises and cloud environments, so you can move applications from on-premises infrastructure to a cloud provider (and vice versa) without significant changes |
| Resource management | Schedules containers onto nodes according to their resource requirements and the resources available, optimizing resource utilization and helping applications run smoothly |
| Scalability | Scales applications to match business needs, either declaratively, by setting the number of replicas to run, or automatically based on resource usage |

Explore more about the benefits associated with Kubernetes in our report here.

How does Kubernetes compare to other container orchestration tools?

While Kubernetes is a popular tool for container orchestration, it is not the only one on the market. Docker Swarm and Apache Mesos are two alternatives that differ from Kubernetes in several ways. The table below compares Kubernetes, Docker Swarm, and Apache Mesos across several parameters:

| Features | Kubernetes | Docker Swarm | Apache Mesos |
|---|---|---|---|
| Infrastructure | Runs containers on physical on-premises infrastructure and on virtual machines | Runs Docker containers on physical or virtual machines that have Docker Engine installed | Runs containers on both physical and virtual machines |
| Container runtime | Requires a container runtime, such as containerd or CRI-O, to be installed on each node to run and manage containers | Built into Docker Engine, so no separate container runtime needs to be installed | Provides native support for launching containers from Docker and AppC container images |
| Cluster model | Runs across a cluster of nodes | Runs across a cluster (a "swarm") of Docker hosts | Runs across a cluster of nodes |
| Monitoring | Provides components for monitoring the cluster, helping you track metrics such as application uptime and resource utilization | Has no built-in monitoring capability, so third-party tools must be installed to monitor the cluster | Mesos master and agent nodes have built-in monitoring that reports a range of statistics, helping you monitor resource usage and detect abnormal situations early |
| Load balancing | Services automatically balance traffic across pods inside the cluster; distributing external traffic requires setting up a LoadBalancer Service or an Ingress controller | Has automated load-balancing capabilities that balance incoming traffic and requests across containers within a cluster | Requires manual setup, which can be automated with frameworks and tools that provide built-in load-balancing capabilities |
| Scaling | Supports both manual scaling and automatic scaling according to business needs | Supports manual scaling whenever there is a requirement | Supports manual and automatic scaling |

You can learn more about how Kubernetes differs from Docker in our blog here.

How to use Kubernetes

How to create a Kubernetes cluster

A Kubernetes cluster can be created locally or in a cloud environment. To create a cluster locally on your PC, you can use Minikube. You also need the kubectl command-line tool to run commands against the cluster. After installing Minikube by following the official Minikube documentation, run the `minikube start` command to start the cluster.

In the cloud, you can create a multi-node cluster from the Civo dashboard: declare the region, nodes, and resources, click the Create cluster button, and you'll get a full production cluster within seconds. Alternatively, you can build a multi-node cluster yourself by manually setting up kubeadm and containerd; discover more about this approach in this Civo Academy lecture.

You can also create a cluster through the Civo Command Line Interface (CLI) or by configuring the Civo Terraform provider. Refer to the official Civo documentation for a detailed overview of creating a cluster with the Civo dashboard, CLI, or Terraform.

How to deploy applications using Kubernetes

When you deploy an application in Kubernetes, you declare the resources it needs and specify their types. These resources can be written as Kubernetes manifests (in YAML) or bundled together in Helm charts. After the resources are applied to the cluster, the API server stores them, the scheduler assigns the resulting pods to nodes, and the kubelet on each node has the container runtime pull the specified container images and start the containers according to the specifications.
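As an illustration of a declared resource, the Python sketch below builds a minimal Deployment manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The application name `web`, the `nginx:1.25` image, and the `deployment_manifest` helper are assumptions for the example.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # desired number of pod replicas
            # The selector tells the Deployment which pods it owns; it must
            # match the labels in the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,  # pulled on whichever node the pod is scheduled to
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("web", "nginx:1.25", replicas=3, port=80)
    print(json.dumps(manifest, indent=2))
```

Assuming the script is saved as `deployment.py`, redirecting its output to a file (`python deployment.py > web-deployment.json`) and running `kubectl apply -f web-deployment.json` would ask the cluster to keep three replicas of the container running.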

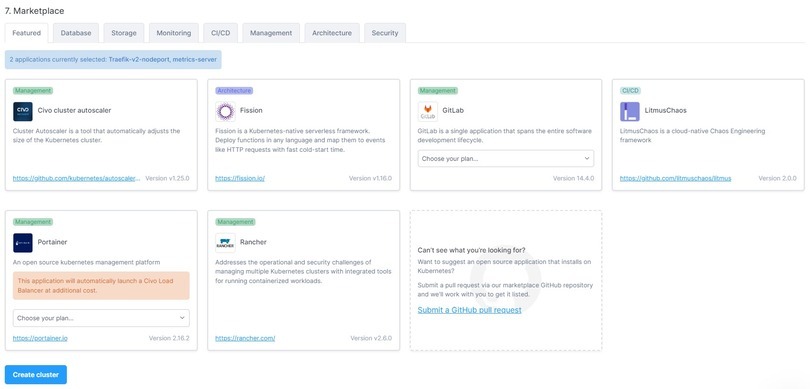

With the help of Civo Kubernetes, you can deploy an application from the wide range available in the Civo Marketplace. While creating a cluster, you will find the marketplace section, which contains applications available for installation on the cluster.

A detailed tutorial on how you can deploy an application with the help of Civo Marketplace can be found here.

How to manage applications in Kubernetes

Managing an application in Kubernetes involves tasks such as configuring it, managing its networking and storage, monitoring its logs, scaling its resources, and rolling out updates. Let’s look at some of the ways you can manage your applications in Kubernetes:

| Features | Description |
|---|---|
| Application configuration | Kubernetes lets you manage application configuration with ConfigMaps and Secrets (the latter intended for sensitive data such as passwords and tokens). Both objects store configuration data separately from the application code, so you can update that data independently as your needs change. |
| Application networking | Kubernetes provides Services and Ingresses for managing application networking. A Service exposes a set of pods as a single network endpoint, either inside the cluster or to external clients such as users on the internet. An Ingress routes external HTTP and HTTPS traffic to different Services based on hostnames or URL paths, acting as a load balancer for your application. |
| Application storage | Kubernetes helps manage your application storage and supports persistent storage, which retains data even if a container is shut down or restarted. Storage can be provisioned dynamically through StorageClasses, or statically by creating PersistentVolumes manually; in both cases, applications request storage through PersistentVolumeClaims. |
| Monitoring applications | Kubernetes provides tools to monitor the health and performance of your application through the logs and metrics it generates. You can view logs from the Kubernetes dashboard or by running the `kubectl logs` command in the Kubernetes CLI. Third-party tools such as Prometheus and Grafana can also be integrated with Kubernetes to analyze application performance and troubleshoot issues when required. |
| Scaling applications | Kubernetes can scale your application resources based on your needs and supports both manual and automatic scaling. You can use the `kubectl scale` command to scale your application's replicas up or down, or you can scale automatically based on resource utilization with the Horizontal Pod Autoscaler (HPA). With Civo Kubernetes, you can also scale the cluster's nodes automatically with the Civo cluster autoscaler, which can be added to any running Civo Kubernetes cluster from the management section of the Civo Marketplace. Learn how to use the autoscaler from our detailed tutorial here. |
| Updating applications | Kubernetes can update applications without downtime using the rolling update strategy, which is the default deployment strategy in Kubernetes. When you update the container image of your application or the deployment configuration, Kubernetes gradually replaces the existing pods with new ones. |
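The Horizontal Pod Autoscaler mentioned in the table computes a target replica count from the ratio between a current metric value and its target, using the formula given in the HPA documentation: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below applies that formula with the default tolerance of 0.1 and clamps the result to minimum and maximum replica counts whose default values here are hypothetical. It deliberately omits parts of the real controller, such as stabilization windows and the handling of pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling formula (simplified sketch)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds (minReplicas / maxReplicas in a real HPA).
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU utilization against a 60% target give a ratio of 1.5, so the sketch returns ceil(3 * 1.5) = 5 replicas; a utilization of 63% (ratio 1.05) falls inside the tolerance and leaves the count unchanged.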

Advanced Kubernetes topics

Networking and service discovery

Networking is an integral part of Kubernetes. It enables communication between tightly coupled containers within the same pod, which share a network namespace and can reach each other over localhost. It also connects pods to each other and to Services, connects clients outside the cluster to Services inside it, and lets pods reach destinations outside the cluster.

Services in Kubernetes distribute traffic among multiple pods, which helps applications scale. Kubernetes implements service discovery through Services, which provide a stable IP address and DNS name for a set of pods.
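Service discovery rests on two mechanisms: a Service selects its backend pods by label, and cluster DNS gives it a predictable name of the form `<service>.<namespace>.svc.<cluster-domain>`. The Python sketch below models both with hypothetical helper names and made-up pod data, assuming `cluster.local` as the cluster domain because it is the common default.

```python
def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based label selector: every key/value in the selector must be present."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_endpoints(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """IP addresses of the ready pods a Service with this selector would route to."""
    return sorted(
        pod["ip"] for pod in pods
        if pod["ready"] and selector_matches(selector, pod["labels"])
    )


def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name that cluster DNS assigns to a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"
```

Because clients look up the stable DNS name instead of individual pod IPs, pods can come and go behind a Service (for example, during scaling or rolling updates) without clients needing to know.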

Learn more about Networking and Service discovery from our range of guided tutorials here.

Storage and data management

Kubernetes provides several options for storing and managing data. Volumes let the containers in a pod store data and share it with each other, and they work with a variety of storage backends, such as cloud storage and local disks. PersistentVolumes (PVs) are pieces of storage in the cluster, provisioned either manually by an administrator or dynamically through StorageClasses, and made available to applications. They decouple storage from the lifecycle of pods and allow administrators to manage storage independently.

PersistentVolumeClaims (PVCs) are requests for storage made on behalf of pods. Kubernetes binds each claim to an available PersistentVolume that satisfies the request, such as its requested size and access mode.
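The Python sketch below models, in simplified form, how a claim is matched to a PersistentVolume: the volume must be unbound, belong to the same StorageClass, support the requested access modes, and offer at least the requested capacity. Choosing the smallest matching volume is an assumption of this sketch, and the class names, volume names, and sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gib: int
    access_modes: frozenset[str]
    storage_class: str
    bound_to: Optional[str] = None  # name of the claim this volume is bound to


@dataclass
class PersistentVolumeClaim:
    name: str
    request_gib: int
    access_modes: frozenset[str]
    storage_class: str


def bind(claim: PersistentVolumeClaim, volumes: list[PersistentVolume]) -> Optional[str]:
    """Bind the claim to a matching volume and return its name, or None if none fits."""
    candidates = [
        pv for pv in volumes
        if pv.bound_to is None
        and pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes  # every requested mode is supported
        and pv.capacity_gib >= claim.request_gib
    ]
    if not candidates:
        # The claim stays Pending (or a StorageClass provisions a new volume for it).
        return None
    best = min(candidates, key=lambda pv: pv.capacity_gib)  # avoid wasting large volumes
    best.bound_to = claim.name
    return best.name
```

Once bound, a volume is no longer a candidate for other claims, which is why a second identical claim in the test falls through to the larger volume.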

Security and access control

When properly configured, Kubernetes offers multiple measures for security and access control. To ensure that only authorized users can access cluster resources, it first authenticates every user or application attempting to access those resources, using mechanisms such as client certificates, bearer tokens, and user credentials to validate their identity. Once a user or application has been authenticated, Kubernetes performs authorization checks to confirm that it is permitted to perform the requested operation. This two-step process helps ensure that users and applications can only perform the operations they have been granted.
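Kubernetes supports several authorization modes; role-based access control (RBAC) is a commonly used one. The Python sketch below models an RBAC-style check for a request that has already been authenticated: roles grant verbs on resources in API groups, bindings attach roles to users, and a request is allowed only if some bound role grants it, since RBAC has no deny rules. The user names, role names, and helper functions are hypothetical.

```python
from dataclasses import dataclass


def _match(allowed: frozenset[str], value: str) -> bool:
    """A rule field matches if it lists the value or the "*" wildcard."""
    return "*" in allowed or value in allowed


@dataclass(frozen=True)
class PolicyRule:
    api_groups: frozenset[str]  # "" stands for the core API group
    resources: frozenset[str]
    verbs: frozenset[str]

    def allows(self, api_group: str, resource: str, verb: str) -> bool:
        return (_match(self.api_groups, api_group)
                and _match(self.resources, resource)
                and _match(self.verbs, verb))


def authorize(user: str, verb: str, api_group: str, resource: str,
              roles: dict[str, list[PolicyRule]],
              bindings: list[tuple[str, str]]) -> bool:
    """Allow the request if any role bound to `user` grants it."""
    for subject, role_name in bindings:
        if subject != user:
            continue
        if any(rule.allows(api_group, resource, verb) for rule in roles.get(role_name, [])):
            return True
    # Permissions are purely additive: anything not explicitly granted is refused.
    return False
```

In this model, a user bound only to a read-only role can list pods but not delete them, and a user with no bindings at all is refused everything.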

Extensions and custom resources

Kubernetes extensions are additional features that are not included in the core Kubernetes API and are instead created by members of the Kubernetes community or by third-party vendors. Popular examples include Helm, a package manager for Kubernetes; Istio, a service mesh used for traffic management; Prometheus, a tool for monitoring Kubernetes; and many others.

Custom resources let users define their own API object types in Kubernetes. Combined with custom controllers, such as operators, they can automate the deployment, scaling, and management of a specific application. Custom resources are added through the Kubernetes API extension mechanism, most commonly by creating a CustomResourceDefinition (CRD) object.
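A CustomResourceDefinition is itself an ordinary Kubernetes object. The Python sketch below builds a minimal `apiextensions.k8s.io/v1` CRD for a hypothetical `Backup` resource in a hypothetical `example.com` group and prints it as JSON; the `crd_manifest` helper and the `schedule` and `retentionDays` fields are illustrative assumptions, not part of any real API.

```python
import json


def crd_manifest(group: str, kind: str, plural: str, singular: str) -> dict:
    """Build a minimal CustomResourceDefinition with one served, stored version."""
    return {
        "apiVersion": "apiextensions.k8s.io/v1",
        "kind": "CustomResourceDefinition",
        # A CRD's name must be <plural>.<group>.
        "metadata": {"name": f"{plural}.{group}"},
        "spec": {
            "group": group,
            "scope": "Namespaced",
            "names": {"plural": plural, "singular": singular, "kind": kind},
            "versions": [{
                "name": "v1",
                "served": True,   # exposed through the API server
                "storage": True,  # the version objects are persisted as
                "schema": {"openAPIV3Schema": {
                    "type": "object",
                    "properties": {"spec": {
                        "type": "object",
                        # Hypothetical fields for the example Backup resource.
                        "properties": {
                            "schedule": {"type": "string"},
                            "retentionDays": {"type": "integer", "minimum": 1},
                        },
                    }},
                }},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(crd_manifest("example.com", "Backup", "backups", "backup"), indent=2))
```

Once such a CRD is applied, the API server serves the new `backups` resource, and a controller or operator can watch and act on `Backup` objects.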

Operators and automation

Kubernetes operators follow a pattern built on top of the Kubernetes API. Operators are software extensions that use custom resources to define the desired state of an application along with its dependencies, and they automate complex tasks such as deploying, scaling, and managing the application by encapsulating operational knowledge in software. This lets developers focus on application logic while the operator manages the application. Beyond automation, operators can also handle self-healing, upgrades, rollbacks, and versioning, providing a consistent deployment process.
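Encapsulating operational knowledge usually means encoding rules such as "fix unhealthy members before anything else" or "upgrade one instance at a time" in a reconcile function. The Python sketch below shows one way to express such rules for a hypothetical `Database` custom resource; the spec fields, member names, version strings, and the one-step-per-reconcile style are assumptions for illustration, not the code of any real operator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DatabaseSpec:
    """Desired state taken from a hypothetical Database custom resource."""
    version: str
    replicas: int


@dataclass
class Member:
    """Observed state of one database instance."""
    name: str
    version: str
    healthy: bool = True


def next_action(spec: DatabaseSpec, members: list[Member]) -> Optional[str]:
    """Return the single next step toward the desired state, or None when converged.

    The operator is assumed to run this again after each step completes.
    """
    # Rule 1: self-healing comes first; change nothing else while a member is unhealthy.
    for m in members:
        if not m.healthy:
            return f"recreate {m.name}"
    # Rule 2: rolling upgrade, one member at a time.
    for m in members:
        if m.version != spec.version:
            return f"upgrade {m.name} to {spec.version}"
    # Rule 3: only scale once every member runs the desired version.
    if len(members) < spec.replicas:
        return f"add member running {spec.version}"
    if len(members) > spec.replicas:
        return f"remove {members[-1].name}"
    return None
```

Returning one step at a time keeps each decision small and lets the operator re-read the latest state before acting again, which is what makes ordering rules like "heal before upgrading" easy to enforce.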

Summary

As Kubernetes continues to evolve and grow in popularity, it is essential for software developers and organizations to stay up to date with its latest developments and advancements. As one of the most popular container orchestration tools in the cloud-native market, Kubernetes automates the deployment and management of containerized applications.

This blog has outlined how, in addition to its automation capabilities, Kubernetes provides automatic scaling, high availability, and self-healing. Its extensive ecosystem and portability allow applications to move seamlessly between on-premises and cloud environments, and its built-in load balancing improves application stability.

If you’re still looking to learn more about Kubernetes, check out some of these resources: