Security in the cloud has become an increasingly important topic over the years. With the move to more managed services, more trust is being handed over to cloud providers. As a result, we need to pay closer attention to the security surrounding cloud computing, especially when it comes to Kubernetes.

Join me as I explore the concept of confidential computing and a new use case we at Civo have been working on related to the Kubernetes control plane in managed clusters.

Why modern security solutions are non-negotiable

As technology advances and the demand for more efficient web solutions increases, the industry is shifting towards no-code approaches, where developers and businesses can create and deploy applications with little to no coding experience. Even so, Kubernetes will continue to play a crucial role behind the scenes at many cloud-based companies.

Kubernetes has become a cornerstone of cloud computing, and its popularity has skyrocketed over the years. It provides a platform for automating the deployment, scaling, and management of containerized applications, making it an essential tool for running complex infrastructure. SaaS providers in particular have recognized the power of Kubernetes and use it to run their software under the hood.

Running Kubernetes and running infrastructure on top of Kubernetes are two distinct challenges. Most businesses gain little from operating Kubernetes themselves, which has driven a move towards managed Kubernetes clusters. However, recent security breaches have highlighted the risks of relying on vendor-managed components. In some cases, insecure components have been running within managed clusters, leading to data breaches and other security vulnerabilities. Unfortunately, as end-users, we often lack visibility into these issues, because the managed components are effectively a black box.

Introducing Intel SGX for the K8s control plane

This is where an Intel technology called SGX comes into play.

SGX (Software Guard Extensions) isolates code and data at the CPU level.

With SGX, when a program is loaded, the CPU computes a cryptographic measurement (a hash) of its code and the initial data loaded with it, such as configuration files. The CPU then sets aside an isolated region of memory, kept encrypted with keys that never leave the processor, which only the code running inside that region can read from or write to; even the operating system and hypervisor are locked out. This protected region is called an enclave, and it gives much stronger guarantees about what is running in that program. Not only is the data protected at runtime, but we can also use the measurement to verify, at any point, that the code and configuration in the enclave are what we expect. This verification is called attestation, and it can be performed by an end-user directly on the system or remotely from another machine.
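To make the measure-then-attest idea concrete, here is a deliberately simplified model: the enclave's identity is a hash over the code and configuration it was loaded with, and a verifier accepts it only if that hash matches a known-good value. This is a sketch under stated assumptions; `measure` and `attest` are hypothetical helpers, and real SGX derives its measurement (MRENCLAVE) from the enclave build process and delivers it in a hardware-signed quote rather than as a plain string.

```python
import hashlib
import hmac


def measure(code: bytes, config: bytes) -> str:
    """Hypothetical stand-in for an enclave measurement (not SGX's algorithm).

    Hashes the code and then the config with SHA-256, length-prefixing each
    part so that shifting bytes between code and config changes the digest.
    """
    digest = hashlib.sha256()
    for part in (code, config):
        digest.update(len(part).to_bytes(8, "big"))
        digest.update(part)
    return digest.hexdigest()


def attest(reported: str, expected: str) -> bool:
    """Accept only if the reported measurement matches the known-good one.

    hmac.compare_digest compares in constant time to avoid timing leaks.
    """
    return hmac.compare_digest(reported, expected)


if __name__ == "__main__":
    binary = b"placeholder for the kube-apiserver binary"
    golden = measure(binary, b"--secure-port=6443")
    print(attest(measure(binary, b"--secure-port=6443"), golden))  # True
    print(attest(measure(binary, b"--secure-port=8080"), golden))  # False
```

Changing either the binary or a single configuration flag produces a different measurement, which is why attestation covers configuration as well as code.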

SGX enclaves address two significant issues with today's control planes: exposure to breaches, and the inability to verify which code is actually running. By running control plane components in enclaves, cloud providers can better ensure the security and integrity of their control planes, giving end-users greater peace of mind and confidence in their cloud services.

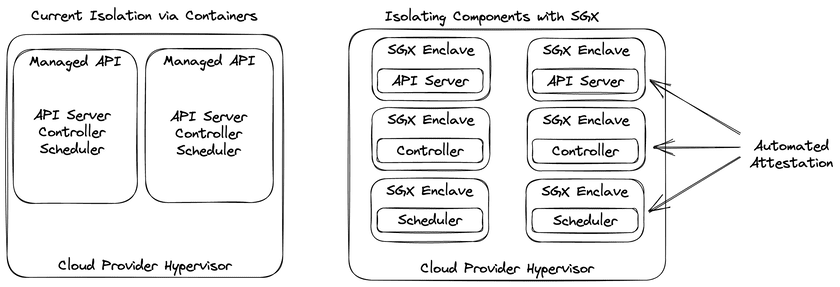

SGX lets us move these components out of traditional isolation mechanisms, such as containers, and into enclaves. Once they are in an enclave, we can continuously attest that control plane components such as the Kubernetes API server are still the same version we initially started.
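Continuous attestation can be pictured as recording a baseline measurement for each component at startup and comparing every later reading against it. The sketch below assumes a hypothetical `read` callback that returns the current measurement per component; in a real system these values would come from verified attestation quotes, not a plain dictionary.

```python
import time
from typing import Callable, Dict, List

# Component name -> measurement digest (hypothetical representation).
Measurements = Dict[str, str]


def drifted(baseline: Measurements, current: Measurements) -> List[str]:
    """Return components whose measurement changed or that disappeared."""
    return sorted(
        name for name, digest in baseline.items() if current.get(name) != digest
    )


def watch(
    read: Callable[[], Measurements], rounds: int, interval_s: float = 0.0
) -> List[List[str]]:
    """Record a baseline at startup, then re-check it `rounds` times.

    Returns the list of drifted components found in each round.
    """
    baseline = read()  # the "version we initially started"
    results = []
    for _ in range(rounds):
        time.sleep(interval_s)
        results.append(drifted(baseline, read()))
    return results


if __name__ == "__main__":
    readings = iter([
        {"kube-apiserver": "aa", "kube-scheduler": "bb"},  # startup baseline
        {"kube-apiserver": "aa", "kube-scheduler": "bb"},  # unchanged
        {"kube-apiserver": "ff", "kube-scheduler": "bb"},  # API server changed
    ])
    print(watch(lambda: next(readings), rounds=2))  # [[], ['kube-apiserver']]
```

Using the first reading as the baseline mirrors the idea of checking against the version we started with; a stricter design would compare against independently published known-good measurements instead.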

The image above compares the traditional approach of running everything within containers (on the left) with a new approach in which each customer's API server and controller run within their own enclaves, giving each customer their own attestation capabilities (on the right).

Securing Kubernetes API with Intel SGX demo

In the video tutorial below, I demonstrate how we can secure the Kubernetes API with Intel SGX by bootstrapping a new cluster with kubeadm and then moving the control plane components (the API server, scheduler, and controller manager) into enclaves. You will then see that kubectl continues to behave as expected, allowing us to spin up new resources.

The demo uses a library OS from the Occlum project to wrap the API components. This allows pre-existing code to run within an enclave and benefit from Occlum's built-in features, such as generating the signing keys needed for cryptographic verification when the code is loaded.

What to expect next

Undoubtedly, there is still a lot of work to do before this sees wider adoption. However, we are committed to working towards a range of goals, including:

- Working with upstream SIGs to support the changes we’ve made to the Kubernetes components.

- Deploying other managed components, such as the kubelet, within an enclave on all nodes in the managed cluster.

- Providing customers with visibility into this automated attestation and allowing them to take policy-based actions based on the outcomes of attestation checks. For example, a node that fails attestation could be automatically removed from a cluster.

- Moving more system components into enclaves, with the long-term vision of running the entire OS within an enclave.
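As an illustration of the policy-based actions mentioned in the list above, the sketch below turns attestation results into kubectl commands that quarantine failing nodes. `AttestationResult` and `plan_actions` are hypothetical names, and the policy itself is an assumption rather than Civo's implementation; only the `kubectl cordon`, `drain`, and `delete node` subcommands are standard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AttestationResult:
    """Hypothetical outcome of an attestation check for one node."""
    node: str
    passed: bool


def plan_actions(results: List[AttestationResult], remove: bool = True) -> List[List[str]]:
    """Build kubectl commands for every node that failed attestation.

    Failing nodes are always cordoned (no new pods scheduled). With
    remove=True they are also drained and deleted from the cluster.
    Commands are returned rather than executed so they can be reviewed.
    """
    commands: List[List[str]] = []
    for result in results:
        if result.passed:
            continue
        commands.append(["kubectl", "cordon", result.node])
        if remove:
            commands.append(["kubectl", "drain", result.node, "--ignore-daemonsets"])
            commands.append(["kubectl", "delete", "node", result.node])
    return commands


if __name__ == "__main__":
    results = [AttestationResult("node-a", True), AttestationResult("node-b", False)]
    for cmd in plan_actions(results):
        print(" ".join(cmd))
```

Keeping planning separate from execution makes the policy easy to dry-run; each planned command could then be passed to `subprocess.run(cmd, check=True)` once an operator, or a stricter automated policy, approves it.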

For more information on Kubernetes security, our team has covered a range of common problems. If you have any other questions, reach out to us on Twitter or in our community Slack channel.